The EU AI Act is reshaping global AI regulations, and US companies must pay attention. Here's why:

- The Act applies to any company - regardless of location - that markets AI systems in the EU or whose AI outputs are used there.

- Non-compliance risks include fines of up to €35 million or 7% of global annual revenue.

- The Act categorizes AI systems by risk (minimal, limited, high, and unacceptable) and sets strict obligations for high-risk systems.

- Key deadlines start as early as 2 February 2025, with full compliance for most high-risk systems required by 2 August 2026.

For US businesses, aligning with these rules isn't optional. The EU's market size and influence mean these standards are becoming a global benchmark. Immediate steps include building an AI governance system, revising contracts, and establishing ongoing compliance monitoring.


Who Must Comply: Determining If the EU AI Act Applies to Your Business

The EU AI Act is making waves far beyond Europe's borders, and understanding whether it applies to your business is critical - especially if you're operating in the U.S. Here's a closer look at how your activities might trigger compliance.

Business Activities That Trigger Compliance

The Act's scope isn't limited to where a company is based. Instead, it zeroes in on how AI systems function and their impact. As KPMG puts it:

"The EU AI Act is applicable to many U.S. companies, potentially even including those with no physical EU presence." [6]

So, what triggers compliance? If you're selling AI products or services in the EU, offering AI-driven software through e-commerce platforms, or providing general-purpose AI models to European clients, you're in the Act's crosshairs. Even if your AI system operates from U.S.-based servers, it falls under the Act if its outputs - like predictions, recommendations, or decisions - are used in the EU. For example, a U.S. recruitment platform screening CVs for a German office or a credit scoring algorithm evaluating EU residents' loan applications would need to comply [6].

Using AI to process EU residents' personal data is another common trigger. Whether you're analyzing this data for marketing, risk assessments, or customer service, the outputs are typically used in the EU, which brings the system into scope; the GDPR applies to that processing as well. Additionally, if your AI technology is embedded in a product sold by an EU-based company, compliance becomes mandatory [6].

Direct vs. Indirect Compliance Requirements

What you need to do to comply depends on your role in the AI value chain. Here’s how it breaks down:

- Providers: If you develop AI systems and place them on the EU market under your own name, you face the most stringent rules. Think conformity assessments, risk management systems, and detailed technical documentation [6].

- Deployers: Businesses that use AI systems under their authority have a different set of responsibilities. These include monitoring system performance, training staff on how the AI works, and notifying authorities about serious incidents if the AI is used in the EU or its outputs reach EU users [3].

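- Importers and Distributors: EU-based businesses that bring AI systems from U.S. providers onto the EU market (importers) or resell them (distributors) must verify compliance, check for CE markings, and maintain records to prove everything is in order [4]. For U.S. companies, these roles typically fall to an EU subsidiary or partner.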

There’s also a critical distinction to keep in mind: if you rebrand or heavily modify an AI system, you might shift from being a deployer to a provider. This change brings stricter obligations. As Vivien F. Peaden from Baker Donelson explains:

"A deployer could be re-classified as the 'provider' of high-risk AI system(s) if it operates the AI systems under the deployer's own name and brand, or otherwise modifies the AI systems for unintended purposes." [3]

For U.S. developers of general-purpose AI models, the risks are also significant. If a third party uses your model and makes it available in the EU, you could be classified as a provider - unless you’ve explicitly prohibited its distribution in the EU [8].
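The role triggers above can be turned into a first-pass triage for each system in your portfolio. The sketch below is a minimal illustration, assuming a handful of yes/no facts per system. The `SystemFacts` fields and the `likely_roles` helper are hypothetical names, the re-classification rule is reduced to the two triggers in the Baker Donelson quote (rebranding and repurposing), and the result is a starting point for legal review, not a determination.

```python
from dataclasses import dataclass


@dataclass
class SystemFacts:
    """Hypothetical yes/no facts about one AI system and how your company handles it."""
    developed_in_house: bool        # you built it (or had it built) and use or offer it under your own name
    rebranded: bool                 # you offer a third-party system under your own name or brand
    modified_for_new_purpose: bool  # you changed a third-party system beyond its intended purpose
    used_internally: bool           # you use it under your own authority (for example, in HR)
    resold_in_eu: bool              # you make someone else's system available on the EU market


def likely_roles(facts: SystemFacts) -> set[str]:
    """Return the roles this system probably triggers (a starting point for legal review)."""
    roles = set()
    if facts.developed_in_house:
        roles.add("provider")
    elif facts.rebranded or facts.modified_for_new_purpose:
        # Rebranding or repurposing can re-classify a deployer as the provider.
        roles.add("provider")
    elif facts.resold_in_eu:
        roles.add("importer or distributor")
    if facts.used_internally:
        roles.add("deployer")
    return roles


# Example: a vendor's CV-screening tool relaunched under your own brand and used in-house.
tool = SystemFacts(developed_in_house=False, rebranded=True, modified_for_new_purpose=False,
                   used_internally=True, resold_in_eu=False)
print(sorted(likely_roles(tool)))  # ['deployer', 'provider']
```

A set is returned because one company can hold several roles for the same system: building a tool and using it in-house makes you both provider and deployer.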

Understanding these roles and triggers is key to navigating the EU AI Act’s requirements effectively.

The 4 Risk Categories: How the EU AI Act Classifies AI Systems

The EU AI Act organizes AI systems into four risk levels - minimal, limited, high, and unacceptable - to establish how much regulation each category requires. As explained by Mario F. Ayoub, Jessica L. Copeland, and Sarah Jiva from Bond Schoeneck & King PLLC:

"The Act takes a risk based approach, with obligations varying based on whether an AI system is considered to pose minimal, limited, high or unacceptable risk." [7]

This structure helps companies understand the compliance measures necessary for their AI systems. Here's a closer look at each category.

Unacceptable Risk: Banned AI Systems

Some AI applications are considered too harmful for use in the EU and have been prohibited since 2 February 2025 [7]. These include systems that manipulate behavior through subliminal techniques, exploit vulnerable groups, or use social scoring. Other banned uses include emotion recognition in workplaces and schools, untargeted scraping of facial images from the internet or CCTV footage to build recognition databases, and predictive policing systems that assess a person's risk of offending based solely on profiling or personality traits. Real-time remote biometric identification in public spaces for law enforcement is also prohibited, except in narrow cases such as searching for missing children or preventing an imminent terrorist attack [6].

Companies using these practices must stop immediately or face steep penalties: up to €35 million or 7% of their global annual revenue, whichever is higher [10].

High Risk: Requirements for Critical AI Applications

While the Act bans the most dangerous systems, it allows high-risk AI under strict regulations. According to KPMG:

"High-risk AI systems create an elevated risk to the health and safety or rights of the consumer. Such systems are permitted on the EU market subject to compliance with certain mandatory requirements and a conformity assessment." [6]

High-risk systems fall into two groups. The first includes AI integrated with regulated products, such as medical devices, vehicles, toys, and machinery, which already fall under EU health and safety laws [11]. The second group covers AI used in sensitive areas like recruitment, credit scoring, critical infrastructure (e.g., water and energy systems), education admissions, law enforcement, border control, and judicial administration [9].

Providers of high-risk systems must meet rigorous requirements before launching their products. These include implementing risk management systems, ensuring data governance to reduce bias, maintaining technical documentation, enabling human oversight, and logging decisions automatically [12]. Additionally, these systems must be registered in a dedicated EU database and undergo conformity assessments. Non-compliance could result in fines of up to €15 million or 3% of global annual revenue. Most high-risk systems must comply by 2 August 2026 [10].

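However, not every system in the Act's list of sensitive use cases (Annex III) is treated as high-risk. A system may be exempt if it does not pose a significant risk of harm, for example because it only performs a narrow procedural task, improves the result of a previously completed human activity, or handles preparatory work, like formatting CVs rather than screening or ranking candidates. The exemption never applies to systems that profile individuals, and providers relying on it must document their assessment before placing the system on the market.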
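The classification order described in this section (banned practices first, then regulated products, then Annex III uses with their exemption, then transparency cases) can be sketched as a simple decision function. This is a hypothetical illustration: the parameter names are assumptions, each flag compresses a legal judgment into a boolean, and it is no substitute for reading the Act's annexes.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


def triage(*, prohibited_practice: bool, safety_component_of_regulated_product: bool,
           annex_iii_use: bool, narrow_or_preparatory_only: bool, profiles_individuals: bool,
           talks_to_people_or_generates_content: bool) -> RiskTier:
    """First-pass tier following the order of checks described above (simplified)."""
    if prohibited_practice:
        return RiskTier.UNACCEPTABLE
    if safety_component_of_regulated_product:
        return RiskTier.HIGH
    if annex_iii_use:
        # Profiling is always high-risk; otherwise the narrow-task exemption may apply.
        if profiles_individuals or not narrow_or_preparatory_only:
            return RiskTier.HIGH
    if talks_to_people_or_generates_content:
        return RiskTier.LIMITED  # transparency duties apply
    return RiskTier.MINIMAL


# Example: a tool that only reformats CVs (Annex III area, preparatory task, no profiling).
print(triage(prohibited_practice=False, safety_component_of_regulated_product=False,
             annex_iii_use=True, narrow_or_preparatory_only=True, profiles_individuals=False,
             talks_to_people_or_generates_content=False).value)  # minimal
```

Real systems can carry obligations from more than one tier (a high-risk chatbot still has to disclose that it is an AI), so a fuller version would return a set of obligations rather than a single tier.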

Limited and Minimal Risk: Lighter Requirements and Exemptions

AI systems posing lower risks have fewer obligations. Limited-risk systems must meet transparency requirements, such as chatbots disclosing their non-human nature or AI-generated content being clearly labeled as synthetic. Minimal-risk systems, which include tools like spam filters or AI-driven video games, face no specific obligations under the Act beyond the general AI literacy duty [6].

The Act encourages voluntary codes of conduct for these systems, but adopting them is optional [6]. Minimal-risk systems make up the majority of AI applications in use today, so most AI tools benefit from these lighter requirements.
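For a limited-risk chatbot, the disclosure duty can be handled at the point where replies are produced. This minimal sketch assumes a hypothetical `wrap_reply` helper and disclosure wording of our own. The `ai_generated` flag merely stands in for the machine-readable marking (such as metadata or watermarks) the Act expects for synthetic content; it is not a compliant marking scheme on its own.

```python
from dataclasses import dataclass

# Hypothetical disclosure text; the Act requires informing people, not this exact wording.
AI_DISCLOSURE = "You are chatting with an AI assistant, not a human."


@dataclass
class Reply:
    text: str
    ai_generated: bool  # machine-readable flag that downstream systems can read


def wrap_reply(generated_text: str, *, first_turn: bool) -> Reply:
    """Prefix the disclosure at the start of a conversation and flag the output as AI-generated."""
    text = f"{AI_DISCLOSURE}\n\n{generated_text}" if first_turn else generated_text
    return Reply(text=text, ai_generated=True)


print(wrap_reply("Your order ships Monday.", first_turn=True).text)
```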

Key Dates and Penalties: What Happens If You Don't Comply

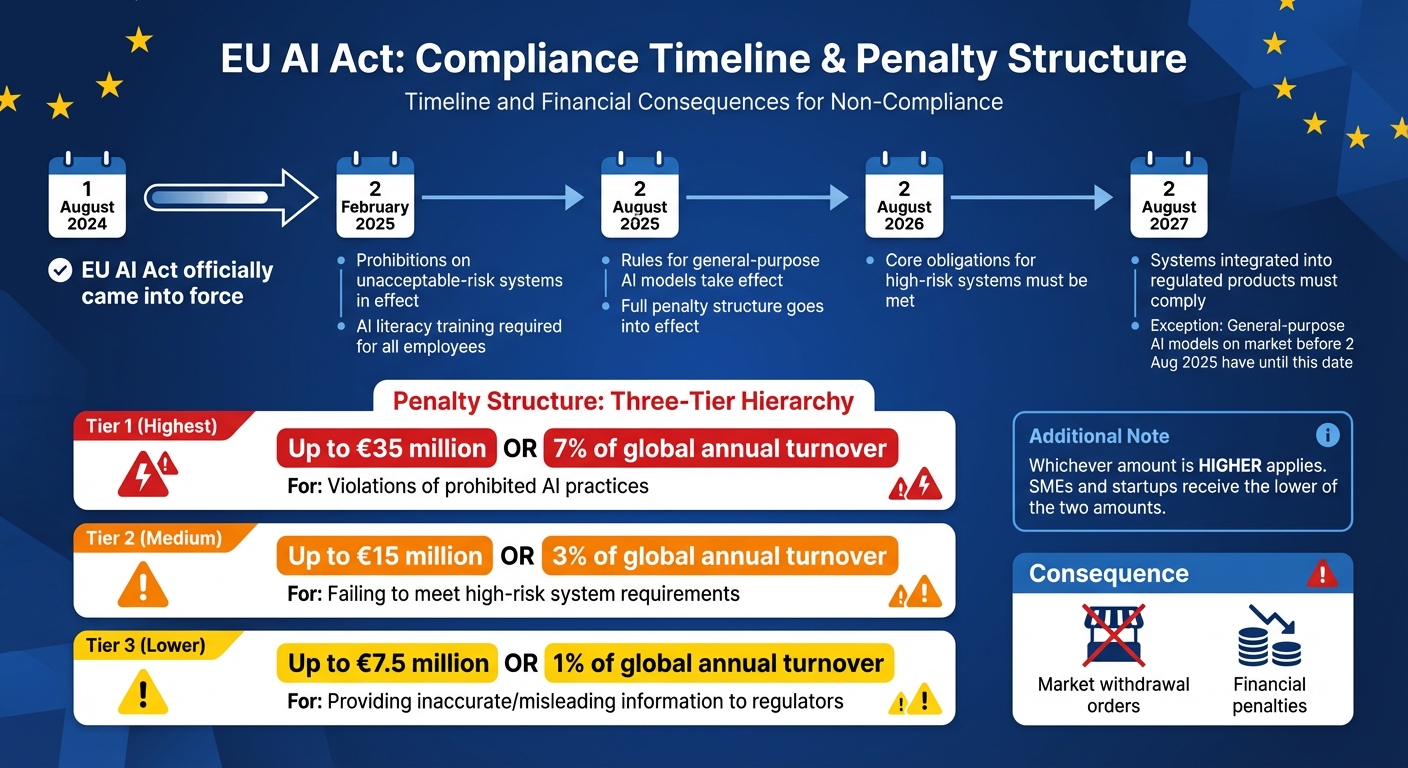

EU AI Act Compliance Timeline and Penalty Structure for US Companies

For US companies navigating the Brussels Effect (the EU's ability to turn its rules into de facto global standards), staying on top of the EU AI Act's deadlines and penalties is critical. The Act entered into force on 1 August 2024 [15][17], and missing its deadlines can lead to hefty fines and restrictions on market access.

Compliance Deadlines You Need to Know

The EU AI Act uses a phased timeline based on the risk level of AI systems. Here's how the key dates break down:

- 2 February 2025: Prohibitions on "unacceptable-risk" systems, like social scoring or workplace emotion recognition, are already in effect. Companies using these technologies must stop immediately [7][20].

- 2 August 2025: Rules for general-purpose AI models and the full penalty structure go into effect [5][7].

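- 2 August 2026: Core obligations for high-risk systems in sensitive use areas such as recruitment and credit scoring (Annex III) apply, along with most of the Act's remaining provisions.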

- 2 August 2027: Systems integrated into regulated products must comply by this point [5][7].

There’s a notable exception for providers of general-purpose AI. If their models were already on the market before 2 August 2025, they have until 2 August 2027 to comply [16][17]. Additionally, starting 2 February 2025, companies must ensure all employees involved in AI operations receive proper "AI literacy" training [16].
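Because obligations switch on at fixed dates, a small lookup can tell a compliance team what already applies and what is coming next. The milestone labels below paraphrase the timeline above and ignore transitional rules other than the general-purpose AI one; `in_effect` and `next_milestone` are hypothetical helper names.

```python
from datetime import date

# Milestones from the timeline above (simplified; most transitional rules omitted).
MILESTONES = [
    (date(2024, 8, 1), "Act enters into force"),
    (date(2025, 2, 2), "Prohibitions and AI literacy duty apply"),
    (date(2025, 8, 2), "General-purpose AI rules and penalty regime apply"),
    (date(2026, 8, 2), "Core high-risk obligations apply"),
    (date(2027, 8, 2), "High-risk AI in regulated products; earlier GPAI models must comply"),
]


def in_effect(on: date) -> list[str]:
    """Milestones that have already taken effect on the given date."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date):
    """The next upcoming (date, label) pair, or None once every milestone has passed."""
    return next(((start, label) for start, label in MILESTONES if start > on), None)


today = date(2025, 9, 1)  # example date
print(len(in_effect(today)), "milestones in effect")  # 3 milestones in effect
upcoming = next_milestone(today)
if upcoming:
    print(f"{(upcoming[0] - today).days} days until: {upcoming[1]}")
```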

Apart from transitional provisions such as the general-purpose AI exception, the regulation sets no grace periods beyond these dates. Once you understand the timeline, it's equally important to grasp the penalties for non-compliance.

Financial Penalties and Market Restrictions

The EU AI Act enforces a three-tier penalty system. Fines are calculated as either a fixed amount or a percentage of global annual revenue - whichever is higher [18][19]. Here's how the penalties stack up:

- Up to €35 million or 7% of global annual turnover for violations of prohibited AI practices [5][20].

- Up to €15 million or 3% of global turnover for most other violations, including failing to meet requirements for high-risk systems [5].

- Up to €7.5 million or 1% of global turnover for providing inaccurate or misleading information to regulators [20].

Beyond fines, non-compliant companies may face orders to withdraw their AI systems from the EU market [5][20]. For smaller businesses like SMEs and startups, the lower of the two penalty amounts (fixed or percentage) is applied, offering some relief [18].
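The "whichever is higher" rule, and its reversal for SMEs, is simple arithmetic that is easy to get backwards. This sketch computes only the statutory ceiling for each tier described above. The tier keys are hypothetical labels, and actual fines are set by regulators case by case, so treat the result as a maximum exposure figure, not a forecast.

```python
# Fine caps from the tiers above: (fixed cap in EUR, percent of worldwide annual turnover).
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligations": (15_000_000, 3),
    "misleading_information": (7_500_000, 1),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: int, *, is_sme: bool = False) -> int:
    """Upper bound of the fine; regulators set the actual amount case by case."""
    fixed_cap, percent = FINE_TIERS[tier]
    turnover_cap = worldwide_turnover_eur * percent // 100
    # Larger companies: whichever is higher. SMEs and startups: whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(f"{max_fine_eur('prohibited_practice', 2_000_000_000):,}")  # 140,000,000
print(f"{max_fine_eur('prohibited_practice', 20_000_000, is_sme=True):,}")  # 1,400,000
```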

These penalties, combined with strict deadlines, underline the importance of compliance for any US company operating in the EU. Ignoring these requirements isn’t an option.

How to Prepare: Steps US Companies Should Take Now

For US companies, aligning with the EU AI Act requires immediate action. Breaking the process into clear steps - like building a governance system, revising contracts, and setting up ongoing monitoring - can make compliance more manageable.

Building an AI Governance System

Start by cataloging every AI system your company uses. This includes systems developed in-house, those licensed from vendors, and even AI embedded in products you sell. Once listed, classify each system into one of four risk categories: Unacceptable, High, Limited, or Minimal [6][4][21]. There are over 138 examples of high-risk AI use cases across industries like healthcare, finance, and recruitment [2].

Next, determine your company’s role under the Act. Are you a provider (creating AI), deployer (using AI), importer (bringing AI into the EU), or distributor (reselling AI)? Each role comes with specific legal responsibilities [5][4][22].
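An inventory is easiest to act on when every entry records its source, role, risk tier, and EU exposure in one place. The sketch below assumes a hypothetical `InventoryEntry` record and a first next step per tier, paraphrased from this article. It filters out systems with no EU exposure and sorts the rest so banned and high-risk systems surface first.

```python
from dataclasses import dataclass

TIER_ORDER = {"unacceptable": 0, "high": 1, "limited": 2, "minimal": 3}
NEXT_STEP = {
    "unacceptable": "stop using it",
    "high": "risk management, documentation, conformity assessment",
    "limited": "add transparency disclosures",
    "minimal": "record it and review periodically",
}


@dataclass
class InventoryEntry:
    name: str
    source: str        # "in-house", "vendor", or "embedded in a product"
    roles: set[str]    # e.g. {"deployer"} or {"provider", "deployer"}
    risk_tier: str     # one of the keys in TIER_ORDER
    eu_exposure: bool  # offered in the EU, or outputs used in the EU


def action_plan(entries: list[InventoryEntry]) -> list[tuple[str, str, str, str]]:
    """In-scope systems ordered by severity, each with its roles and a first next step."""
    in_scope = [e for e in entries if e.eu_exposure]
    in_scope.sort(key=lambda e: TIER_ORDER[e.risk_tier])  # stable sort keeps input order within a tier
    return [(e.name, e.risk_tier, ", ".join(sorted(e.roles)), NEXT_STEP[e.risk_tier])
            for e in in_scope]


inventory = [
    InventoryEntry("support chatbot", "vendor", {"deployer"}, "limited", True),
    InventoryEntry("CV ranking model", "in-house", {"provider", "deployer"}, "high", True),
    InventoryEntry("US-only sales forecast", "in-house", {"provider", "deployer"}, "minimal", False),
]
for row in action_plan(inventory):
    print(row)
```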

After mapping out your systems and roles, assign oversight personnel to monitor AI usage, ensure human involvement, and address deviations promptly [5][2]. High-risk systems require a risk management system to identify and minimize foreseeable risks [4][2]. This includes checking training, validation, and testing datasets for possible biases and making sure they are sufficiently representative [5][2]. As KPMG highlights:

"The chief information security officer (CISO) is a key senior role that will be most affected by the EU AI Act, as it will impact how businesses develop and deploy AI technologies and secure their data." [6]

To strengthen governance, align internal frameworks with standards like ISO 42001, the NIST AI Risk Management Framework, or OECD guidelines [6][23]. If managing multiple AI systems, automated detection tools can simplify the inventory process [6].

Once your governance system is in place, update legal and technical frameworks to reflect these changes.

Revising Contracts and Technical Documentation

Update contracts and documentation to meet EU AI Act requirements. Audit procurement contracts with vendors to ensure they provide necessary technical documentation and usage instructions for high-risk systems [5][14]. Clearly define roles and liabilities in service agreements - whether you’re a provider or deployer [5][2]. For third-party AI models, confirm the training data is accurate and that you hold the required intellectual property and privacy rights [2].

For high-risk systems, ensure technical documentation includes auditable logs, risk management descriptions, and records of training and testing processes [5][4]. Mayer Brown advises:

"The expense to generate technical documentation is relatively small if built into the AI tool from the beginning." [2]

Integrate logging and metadata tracking into your AI systems to monitor performance in real time. This includes checking for fairness, accuracy, bias, and cybersecurity risks [2].
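The Act requires high-risk systems to record events automatically, but it does not prescribe a log format. This minimal sketch assumes JSON Lines and hypothetical field names (`system_id`, `model_version`, `input_sha256`, and so on): each decision becomes one append-only record that links the output to the exact model version and input, and names the human reviewer where there was one.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def build_record(*, system_id: str, model_version: str, input_payload: dict,
                 output: str, human_reviewer: Optional[str] = None) -> dict:
    """One audit-log entry for a single AI decision."""
    canonical = json.dumps(input_payload, sort_keys=True).encode("utf-8")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system_id": system_id,
        "model_version": model_version,
        # A hash ties the entry to the exact input without copying raw data into the log.
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "output": output,
        "human_reviewer": human_reviewer,
    }


def append_jsonl(path: str, record: dict) -> None:
    """Append one record per line (JSON Lines), so earlier entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


record = build_record(system_id="cv-ranker", model_version="1.4.0",
                      input_payload={"application_id": 1017}, output="shortlisted",
                      human_reviewer="recruiter-42")
print(json.dumps(record, indent=2))
```

Hashing the input keeps raw data out of the log while still letting an auditor match an entry to a stored input; whether that is sufficient, and how long logs must be kept, depends on your own legal analysis.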

Update your Service Level Agreements (SLAs) to include post-market monitoring clauses. Vendors should be required to report serious incidents or model drift that could affect compliance [5][4][2]. If you’re providing high-risk systems, you must supply deployers with detailed instructions on system limitations and human oversight requirements [14]. Transparency reports outlining system capabilities and limitations are also essential [2].

With contracts and documentation in order, the next step is to focus on maintaining compliance.

Maintaining Compliance Through Regular Reviews

Continuous review is critical for staying compliant. The EU AI Act mandates post-market monitoring for high-risk systems, requiring companies to collect and analyze performance data over the system’s lifetime [5][4]. A key challenge here is model drift, where AI models lose accuracy or fairness over time [2]. Mayer Brown explains:

"The EU Act calls for testing, monitoring, and auditing, pre- and post-deployment of the high-risk AI... The reason underpinning this requirement is the issue of model drift." [2]

Embed real-time monitoring into your AI systems. Set up processes to test for accuracy, bias, and model drift regularly [2]. Document any performance issues or incidents, as these records are vital for demonstrating accountability to regulators [5][4]. High-risk systems should also include a "kill switch" to shut them down if compliance cannot be restored [2].
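Regular drift testing needs a concrete metric. One common choice in credit-risk and machine-learning monitoring, swapped in here as an illustration rather than anything the Act requires, is the Population Stability Index (PSI), which compares the distribution of a model's inputs or scores today against a baseline. The 0.1 and 0.25 thresholds are widely used rules of thumb, and mapping the score to a suspension step in the hypothetical `drift_action` helper is one way to connect monitoring to the kill switch described above.

```python
import math
from bisect import bisect_right


def to_proportions(values: list[float], cuts: list[float]) -> list[float]:
    """Share of values in each bin; `cuts` are sorted bin boundaries."""
    counts = [0] * (len(cuts) + 1)
    for v in values:
        counts[bisect_right(cuts, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum of (actual - expected) * ln(actual / expected)."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_action(score: float, warn: float = 0.1, halt: float = 0.25) -> str:
    """Map a drift score to a response; thresholds are rules of thumb, not legal limits."""
    if score >= halt:
        return "suspend and escalate"  # hand off to the kill-switch / human review process
    if score >= warn:
        return "investigate"
    return "ok"


cuts = [0.25, 0.5, 0.75]
baseline = to_proportions([0.1, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9, 0.2], cuts)
current = to_proportions([0.8, 0.85, 0.9, 0.95, 0.7, 0.9, 0.6, 0.99], cuts)
score = psi(baseline, current)
print(round(score, 3), drift_action(score))
```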

Conduct regular gap analyses to compare your policies against the Act’s requirements and address any shortcomings before deadlines arrive [6][23][21]. Use tools like the IAPP Global AI Law and Policy Tracker or the EU AI Act Compliance Matrix to stay informed about regulatory updates. For companies managing multiple AI systems, automated solutions can simplify compliance tracking and workflow management. As KPMG notes:

"Leveraging an automated solution to manage various aspects of compliance mapping, obligations tracking, and workflow management can aid in supporting and scaling various governance activities." [6]
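A gap analysis boils down to comparing the controls each system needs against the controls it already has. This sketch assumes hypothetical control names loosely drawn from the obligations described in this article, written from a provider's perspective (deployers have a different list); a real compliance matrix would be far more granular.

```python
# Hypothetical control names, loosely following the obligations described in this article.
REQUIRED_CONTROLS = {
    "high": {
        "ai_literacy", "risk_management", "data_governance", "technical_documentation",
        "automatic_logging", "human_oversight", "eu_database_registration",
        "conformity_assessment", "post_market_monitoring",
    },
    "limited": {"ai_literacy", "transparency_notice"},
    "minimal": {"ai_literacy"},
}


def gap_report(risk_tier: str, implemented: set[str]) -> dict:
    """Which required controls are missing, and what share is already covered."""
    required = REQUIRED_CONTROLS[risk_tier]
    done = required & implemented
    return {
        "missing": sorted(required - implemented),
        "coverage": len(done) / len(required),
    }


report = gap_report("high", {"ai_literacy", "technical_documentation", "automatic_logging"})
print(report["missing"])
print(f"{report['coverage']:.0%} covered")  # 33% covered
```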

US companies can also join the European Commission’s “AI Pact,” a voluntary initiative to adopt key obligations ahead of enforcement. This proactive step not only reduces compliance risks but also positions your company as a leader in responsible AI practices.

Conclusion: Using EU AI Regulations as a Business Advantage

The EU AI Act offers a chance for forward-thinking US companies to stay ahead. Complying early ensures smooth access to a consumer base of 450 million while outpacing competitors who delay [25][26].

As Peter Stockburger, Office Managing Partner at Dentons, puts it:

"Organizations that do it right can take advantage of market share and address the growing needs of customers, partners, and regulators, which are increasingly looking to organizations to only develop and deploy AI in a responsible, safe, and ethical manner." [24]

Operating under a single, EU-compliant model is cost-efficient and spares businesses the complexity of juggling varying standards. It also prepares them for the growing wave of US state laws that echo EU principles: roughly 1,000 AI-related bills were expected to be in motion across US states in 2025, making the EU framework a reliable baseline for compliance [1][13][25][26]. The financial and regulatory stability this provides is well worth the effort, given the risks of falling behind.

Failing to comply comes with steep penalties, potential loss of market access, and diminished trust [10][25]. Additionally, global B2B partners are increasingly scrutinizing vendors to ensure they have strong data governance measures in place before forming partnerships [25].

By adopting the Act's risk-based approach, US companies can position themselves as leaders in global AI governance rather than playing catch-up. The Center for AI Policy highlights this point:

"If the US remains at its current political impasse regarding AI policy, its companies will be effectively regulated by the EU." [1]

Early adopters gain a distinct advantage by demonstrating a commitment to ethical AI: they can capture market share from slower competitors and build trust with customers worldwide. The EU AI Act is setting the benchmark for responsible AI, and companies that act now will help shape AI governance instead of scrambling to adapt later.

FAQs

Do we fall under the EU AI Act even with no EU office?

Yes, you can be. The Act has extraterritorial scope: it can apply to a company with no EU office if that company places AI systems on the EU market, puts them into service there, or uses AI whose outputs are used in the EU. Physical presence is not the test.

How do we know if our AI is “high-risk” or exempt?

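Start with two questions. Is the AI system a safety component of a product already covered by EU product-safety law, such as a medical device, vehicle, toy, or machine? Or is it used in one of the sensitive areas listed in Annex III, such as recruitment, credit scoring, critical infrastructure, education, law enforcement, border control, or the administration of justice? If either answer is yes, the system is likely high-risk. An Annex III system can still be exempt if it only performs a narrow procedural or preparatory task and poses no significant risk of harm, but systems that profile individuals never qualify, and any reliance on the exemption should be documented.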

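What should we do first to be ready for 2 February 2025?

The EU AI Act entered into force on 1 August 2024, and its first obligations, the ban on prohibited practices and the AI literacy duty, apply from 2 February 2025. Start by getting familiar with the Act's scope and requirements. The first step is determining whether your AI systems are covered by the regulation, particularly if they have an impact on the EU market, and confirming that none of them relies on a prohibited practice.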

Next, identify your position in the AI value chain: are you a provider, deployer, importer, or distributor? Each role comes with specific responsibilities, so understanding where you fit is crucial.

Once that’s clear, focus on developing compliance strategies. This includes implementing thorough risk management processes, maintaining detailed documentation, and ensuring your systems meet the necessary standards. If you’re unsure about any aspect, it’s a good idea to consult with experts to navigate the complexities of the regulation effectively.