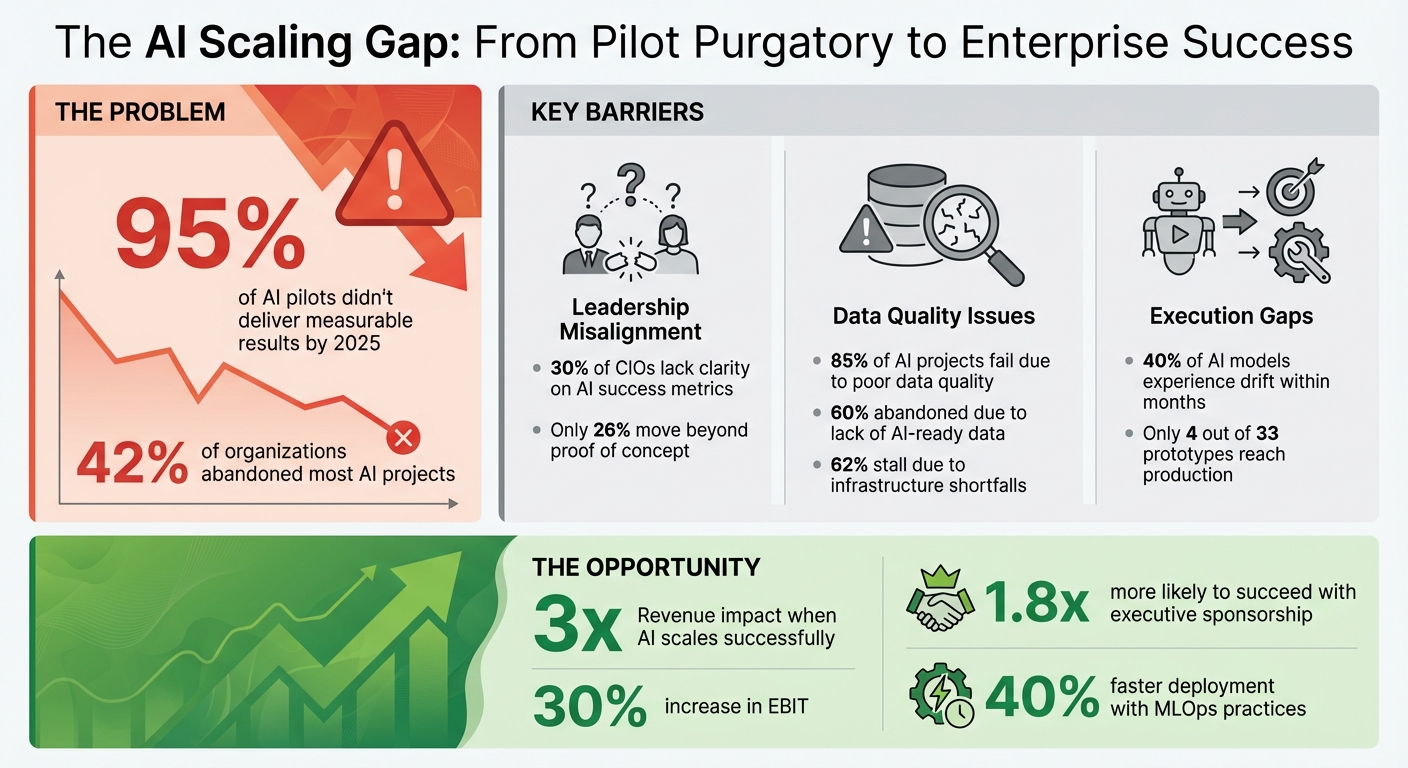

Stuck in AI pilot mode? You’re not alone. Most companies fail to turn promising AI experiments into real business impact. In 2025, 95% of generative AI pilots failed to deliver measurable results, and 42% of organizations abandoned most of their AI projects.

Here’s the problem: AI pilots often work in controlled environments but collapse when faced with messy, real-world data or when they lack proper integration into business operations. Companies lose millions of euros, while competitors move faster, scaling AI to boost revenue and efficiency.

The solution? A clear path to scale AI effectively:

- Align leadership with AI goals: Tie AI projects to specific business outcomes, like cost reduction or revenue growth.

- Fix data issues: Build strong, reliable data pipelines to handle messy production data.

- Adopt MLOps: Use automation to ensure AI models stay accurate and operational over time.

- Drive team collaboration: Engage employees by showing how AI improves their daily work.

Why it matters: Scaling AI can triple revenue impact and increase EBIT by 30%, but only if businesses move beyond isolated experiments and focus on execution. This guide breaks down how to do it, with practical steps, real examples, and tools to make AI work for your organization.

Figure: AI Pilot Failure Statistics and Scaling Success Metrics



Common Barriers to Scaling AI

To understand why AI projects often struggle to progress, it's crucial to identify the main obstacles. Three key issues frequently keep organisations stuck in the pilot phase: a disconnect between leadership and AI goals, challenges with data infrastructure, and execution shortfalls. These hurdles can even derail technically sound projects before they reach full-scale implementation.

Misalignment Between Leadership and AI Goals

The most critical challenge is strategic misalignment. AI initiatives often falter when treated as isolated IT experiments rather than as part of a broader business transformation. Alarmingly, nearly 30% of CIOs admit they lack clarity on the success metrics for their AI proofs-of-concept. When leadership focuses solely on technical accuracy without linking it to measurable business outcomes, projects are set up for failure. If leaders can’t clearly explain how an AI project will cut costs, boost revenue, or resolve a bottleneck, funding dries up. Unsurprisingly, only 26% of organisations manage to move beyond proofs of concept to generate tangible business results.

"Scaling AI is a business transformation journey, not an IT project. Companies that tie AI metrics to business KPIs succeed ten times more often."

– Thomas H. Davenport, Babson College Professor

Executive sponsorship plays a pivotal role. Organisations with dedicated executive sponsors are 1.8 times more likely to scale AI successfully. Without this top-level commitment, projects often lose momentum when they face the inevitable challenges of production deployment. This lack of alignment at the leadership level is a major reason AI initiatives fail to progress beyond experimental stages.

Data Access and Quality Problems

Another major stumbling block is the gap between pilot data and real-world production data. Pilots often use clean, curated datasets - perhaps 1,000 neatly formatted records. But production environments require managing millions of messy records, riddled with typos, incomplete fields, and data inconsistencies as market conditions evolve. Poor data quality causes 85% of AI projects to fail, and 60% are abandoned due to a lack of AI-ready data - structured, governed, and regularly updated datasets. Data silos exacerbate the issue, with marketing's customer data often disconnected from operations' service records, for example.

Scaling AI demands strong data pipelines - systems that ensure data flows reliably from source to AI models in real time. Yet, 62% of AI projects stall due to infrastructure shortfalls, including underdeveloped data engineering and security challenges. Many organisations delay progress by attempting large-scale, multi-year data lake projects instead of focusing on targeted pipelines for high-priority use cases. Without fixing these foundational data issues, AI initiatives struggle to move from pilot to production.

Gaps in Execution and Governance

Execution failures are another significant barrier. One common issue is the "ownership dilemma": pilots are often managed by innovation labs or IT teams that aren’t responsible for the operational outcomes. This leads to challenges when operational teams inherit systems they didn’t help design, resulting in maintenance gaps and workflow mismatches. The result? Teams revert to familiar tools, and the AI project stalls.

A lack of mature Machine Learning Operations (MLOps) frameworks is another critical problem. Without automated systems for version control, continuous monitoring, and retraining, AI models degrade quickly as market conditions change.

"AI pilots fail because they lack production readiness. MLOps is no longer optional - it's the foundation for reliability, compliance, and trust."

– Dr. Michael I. Jordan, UC Berkeley Researcher

A real-world example brings these challenges to life: In 2022, Ford Motor Company's commercial vehicle division invested millions of euros in an AI system designed to predict vehicle failures 10 days in advance using real-time sensor data. While the pilot successfully predicted 22% of certain failures, the project stalled because it couldn’t integrate with the company’s legacy service systems and faced inconsistent adoption across its dealership network. The AI itself worked, but the surrounding systems and processes did not. These execution and governance gaps are a major reason why many organisations fail to achieve enterprise-wide AI implementation.

Strategies for Escaping Pilot Purgatory

Breaking out of pilot purgatory means tackling weak data systems and outdated practices head-on. The key isn't just about building better AI models; it's about building the right systems around them. Here's how to move from isolated AI experiments to full-scale enterprise deployment.

Building Strong Data Foundations

Data preparation can't be an afterthought. AI success starts with structured, well-governed, and continuously updated datasets. This process involves five key stages:

- Ingestion: Use schema validators to detect changes within minutes.

- Transformation: Clean and mask sensitive data.

- Governance: Track data lineage to meet regulatory requirements.

- Serving: Enable real-time access through APIs and microservices.

- Feedback Loops: Capture errors to improve retraining.

Each stage is vital for avoiding failures that derail scaling efforts. For example, SNCF Gares & Connexions implemented a digital twin across 3,000 train stations in 2024 using NVIDIA Omniverse. This approach ensured 100% on-time preventive maintenance and cut issue response times by half. Their success came from focusing on data quality through automated contracts and validators.
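To make the ingestion stage concrete, here is a minimal sketch of a schema validator that checks each incoming record against a data contract and sets aside anything that breaks it. The contract, the field names, and the `validate_batch` helper are illustrative assumptions, not part of any tool mentioned in this guide.

```python
from dataclasses import dataclass

# Hypothetical data contract: field name -> expected Python type.
CONTRACT = {"customer_id": int, "email": str, "amount": float}


@dataclass
class ValidationResult:
    valid: list
    rejected: list  # (record, reason) pairs, kept for the feedback loop


def validate_batch(records, contract=CONTRACT):
    """Split a batch into records that satisfy the contract and those that do not."""
    result = ValidationResult(valid=[], rejected=[])
    for record in records:
        missing = [f for f in contract if f not in record]
        wrong_type = [
            f for f, expected in contract.items()
            if f in record and not isinstance(record[f], expected)
        ]
        extra = [f for f in record if f not in contract]
        if missing or wrong_type or extra:
            reason = f"missing={missing} wrong_type={wrong_type} unexpected={extra}"
            result.rejected.append((record, reason))
        else:
            result.valid.append(record)
    return result


batch = [
    {"customer_id": 1, "email": "a@example.com", "amount": 19.5},
    {"customer_id": "2", "email": "b@example.com", "amount": 7.0},  # id arrived as text
    {"customer_id": 3, "email": "c@example.com"},                   # amount is missing
]
result = validate_batch(batch)
print(len(result.valid), "valid;", len(result.rejected), "rejected")
for record, reason in result.rejected:
    print(record["customer_id"], "->", reason)
```

Rejected records are kept with a reason rather than silently dropped, so they can feed the feedback-loop stage instead of disappearing.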

"Data remains the biggest challenge and opportunity... data quality needs to be managed at the source."

– Bain & Company

The financial stakes are high. Data platforms often drive unexpected AI costs, with organizations underestimating scaling expenses by 250% to 400%. Poor data quality, the failure driver described earlier, is a common culprit. Solutions like automated schema validation, modular architecture to avoid vendor lock-in, and regulatory-friendly data lineage can prevent these pitfalls.

Once data pipelines are in place, the next step is ensuring model reliability through MLOps.

Using MLOps for Scalability

MLOps (Machine Learning Operations) bridges the gap between experimental AI and operational scalability. Without it, 40% of AI models experience drift within months, and only 4 out of 33 prototypes make it to production.

The key is to treat models like software. This includes:

- Version control for code and datasets.

- Automated testing pipelines.

- Continuous monitoring.

- Centralized model registries for easy rollbacks.
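As a rough illustration of what a central registry with rollback involves, the sketch below keeps model versions in memory alongside the dataset hash each one was trained on. The `ModelRegistry` class, its method names, and the artifact paths are hypothetical; a production registry would persist this in a dedicated service rather than a Python list.

```python
class ModelRegistry:
    """In-memory stand-in for a central model registry (hypothetical API)."""

    def __init__(self):
        self._versions = []  # (version, artifact_uri, dataset_hash) tuples
        self._live = None    # index into _versions of the serving model

    def register(self, artifact_uri, dataset_hash):
        """Record a new model together with the dataset it was trained on."""
        version = len(self._versions) + 1
        self._versions.append((version, artifact_uri, dataset_hash))
        return version

    def promote(self, version):
        """Mark a registered version as the one serving traffic."""
        if not 1 <= version <= len(self._versions):
            raise ValueError(f"unknown version {version}")
        self._live = version - 1

    def rollback(self):
        """Step back to the previously registered version."""
        if self._live is None or self._live == 0:
            raise RuntimeError("no earlier version to roll back to")
        self._live -= 1
        return self.live()

    def live(self):
        return None if self._live is None else self._versions[self._live]


registry = ModelRegistry()
v1 = registry.register("models/fraud/v1.pkl", "sha256:aaa")
v2 = registry.register("models/fraud/v2.pkl", "sha256:bbb")
registry.promote(v2)
restored = registry.rollback()  # v2 misbehaves in production, so step back
print("serving version", restored[0])
```

Storing the dataset hash next to the model artifact is what makes a rollback reproducible: you know both which model and which data produced it.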

Organizations that adopt MLOps practices reduce model deployment time by 40%. For instance, a Fortune 500 bank in 2025 used MLOps pipelines to monitor its fraud detection AI. When model drift hit a 10% threshold, automated retraining reduced false positives by 30%, saving millions in manual review costs. Automation also freed data scientists from constant oversight.
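The bank example does not say how drift was measured, so the sketch below assumes one common choice, the Population Stability Index (PSI), and treats a PSI of 0.10 as the retraining trigger. The function names, the bin count, and the sample data are all illustrative assumptions.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one feature; 0.0 means identical binned distributions."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all baseline values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(psi, threshold=0.10):
    """Assumed policy: trigger retraining once drift reaches the threshold."""
    return psi >= threshold


baseline = [i / 100 for i in range(100)]       # feature values seen at training time
shifted = [0.5 + i / 200 for i in range(100)]  # production values have moved upward

print(should_retrain(population_stability_index(baseline, baseline)))
print(should_retrain(population_stability_index(baseline, shifted)))
```

In a real pipeline the second call would kick off a retraining job instead of printing a flag, and the threshold would be tuned per feature.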

"MLOps emerged to address this primary roadblock of transitioning models from development to production."

– Gartner

Start small during the pilot phase with version control, reproducible environments, and automated CI/CD pipelines. Build data observability to handle the messy, incomplete data that production environments throw at you. These steps help bridge the gap between experimental AI and real-world application.

Driving Change Management and Team Collaboration

Scaling AI isn't just a technological challenge - it’s also an organizational one. Success requires both leadership and team buy-in. Leadership sets strategy and provides resources, while teams contribute domain expertise and practical solutions. The reality is that 70% to 90% of enterprise AI initiatives stall not because of technical issues, but because of organizational friction.

A federated hub-and-spoke model works best. A central Centre of Excellence sets standards and maintains platforms, while embedded teams use their domain knowledge to develop specific solutions. This approach avoids the trap of "ivory tower" models that don’t align with actual workflows.

Adoption happens when AI tools make a tangible difference in daily work. For example, B2B professionals using AI tools report saving at least one workday per week. Position AI as a tool to handle routine tasks, allowing employees to focus on complex, high-value work. Peer advocates (one for every 8–10 employees) can host office hours and share practical examples to build trust and familiarity.

"Adoption doesn't start with town-hall applause or pulse-survey scores. It starts when someone feels an unmistakable boost in their own day."

– Superhuman Team

To drive this shift, update performance metrics and incentives to reflect AI-augmented workflows. If employees are still rewarded for outdated volume-based metrics, they'll resist tools that emphasize judgment-heavy tasks. Conduct a "bottleneck blitz" to identify and eliminate legacy processes that slow AI adoption. Use a value-to-complexity matrix to prioritize AI projects with real business impact, avoiding flashy but ineffective initiatives.
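A value-to-complexity matrix can be as simple as two scores per use case. The sketch below is one possible way to encode it; the 1 to 5 scales, the quadrant labels, and the example use cases are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    value: int       # 1 (low) to 5 (high) expected business impact
    complexity: int  # 1 (easy) to 5 (hard) delivery effort


def quadrant(use_case, midpoint=3):
    """Place a use case in one of four quadrants of the matrix."""
    high_value = use_case.value >= midpoint
    low_complexity = use_case.complexity < midpoint
    if high_value and low_complexity:
        return "quick win"
    if high_value:
        return "strategic bet"
    if low_complexity:
        return "fill-in"
    return "avoid"


candidates = [
    UseCase("invoice data extraction", value=4, complexity=2),
    UseCase("dynamic pricing engine", value=5, complexity=5),
    UseCase("meeting-notes summariser", value=2, complexity=1),
    UseCase("holographic lobby assistant", value=1, complexity=4),
]

# Rank by value per unit of complexity, breaking ties in favour of higher value.
ranked = sorted(candidates, key=lambda u: (u.value / u.complexity, u.value), reverse=True)
for use_case in ranked:
    print(f"{use_case.name}: {quadrant(use_case)}")
```

The scores themselves should come from the business and delivery teams together; the code only keeps the ranking honest once they are agreed.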

Case Studies: Successful AI Scaling in Practice

Examples from various industries highlight how companies have moved beyond pilot projects to fully integrate AI into their operations, transforming key business functions along the way.

Insurance Industry: Automating Claims Processing

In the insurance sector, companies like Aviva and Allianz Partners have demonstrated how AI can revolutionize claims processing.

Aviva, the UK's largest general insurer, implemented over 80 AI models within its claims department between 2023 and 2024. Under the leadership of Chief Claims Officer Waseem Malik, the company invested 40,000 hours in staff training to cultivate a digital-first mindset. The results were striking: liability assessment times dropped by 23 days, customer complaints fell by 65%, and the company saw a sevenfold improvement in its Net Promoter Score.

"Aviva's leadership had extreme conviction that contrary to conventional belief, they could improve customer experience, efficiency, and accuracy in parallel, if they adopted a domain-wide approach."

– Sid Kamath, Partner, McKinsey

Meanwhile, Allianz Partners took a daring step in late 2025 by skipping traditional proof-of-concept stages. Instead, CEO Tomas Kunzmann and Chief Data Officer Pieter Viljoen launched autonomous automation directly into production across the UK and DACH markets. Kunzmann explained the urgency: "Time was against us. If we didn't act quickly, we risked losing half of our value chain - we needed to move immediately to protect our margins." Their bold move reduced claims processing times from 29 days to just 3.5 days, with a projected €300 million in annual profit gains by 2027.

Other insurers also achieved impressive results:

- Eastern Alliance used AI agents for document processing, reducing turnaround times from 5 days to just 1 hour, saving over 2,700 human hours.

- State Farm deployed machine learning for fraud detection, cutting fraud rates by 30% in the first year.

| Organisation | AI Application | Key Outcome |
|---|---|---|
| Aviva | End-to-end claims journey | 23-day reduction in liability assessment; 65% fewer complaints |
| Allianz Partners | Autonomous automation for claims | Handling time reduced from 29 days to 3.5 days |
| Eastern Alliance | AI agents for document processing | Processing time cut from 5 days to 1 hour |
| State Farm | Machine learning for fraud detection | 30% reduction in fraud in year one |

These examples from the insurance industry underline how AI can deliver measurable improvements in efficiency, accuracy, and customer satisfaction.

Retail Sector: Personalising Customer Experiences

Retail has also embraced AI to redefine how businesses engage with customers. For instance, Walmart scaled its proprietary "Wallaby" large language model across its entire enterprise in 2025. This AI tool supports shop floor associates with real-time merchandising decisions and customer service inquiries, shifting the focus from operational efficiency to creating highly responsive and personalized customer experiences.

The financial benefits of such personalization are compelling. Retailers using AI-powered conversational shopping assistants have seen conversion rates rise by at least 7 percentage points. Some initiatives have delivered returns up to 24 times their initial investment. Additionally, automating tasks like marketing collateral creation - spanning translations, social media posts, and personalized landing pages - has resulted in productivity improvements of 30% to 40%.

One key takeaway for retailers? Don't spread resources too thin by trying to deploy AI across every business unit at once. Instead, prioritize areas where scaling AI delivers the highest measurable returns. Using modular technical systems can also streamline updates, allowing improvements in one use case to cascade efficiently across multiple applications. This approach not only accelerates development but also reduces costs.

These lessons from retail showcase how targeted and thoughtful AI scaling can drive both financial and operational success.

Building an Enterprise AI Infrastructure

Once data and operational challenges are addressed, the next step is to establish a scalable AI framework that supports enterprise-wide transformation. Moving AI from small-scale pilots to full-scale implementation requires a strong technical foundation capable of sustaining long-term success.

AI Factory Model for Standardised Deployment

The AI Factory approach standardises AI services, enabling consistent and scalable deployment. Instead of treating each AI initiative as a one-off experiment, this model creates a system for deploying AI services across the organisation in a repeatable, efficient manner. It shifts the focus from scattered pilot projects to fully operational services with clear ownership, defined contracts, and controlled costs.

The factory model operates through three key layers:

- Studio: This is the design hub where teams create service blueprints, select suitable models, and ensure quality engineering before deployment.

- Runtime: This layer oversees production operations, including identity verification, authorisation, policy enforcement, and monitoring. Its primary goal is to ensure AI systems operate safely and responsibly.

- Productised AI Services: These are reusable components, like tools for incident triage or test case generation, that teams can access without needing to rebuild foundational systems [32,35].

"Intelligence is easy to demo. Operability is hard to industrialise." – Raktim Singh, Enterprise AI Strategist

To ensure smooth and accountable AI deployment, organisations should establish "paved roads", or preconfigured pathways, that guide teams toward safe and efficient implementation. This approach helps manage AI operations effectively while preventing uncontrolled growth of AI agents [32,35].

A structured 90-day roadmap can help organisations transition to this model:

- First 30 days: Identify key services and define contracts.

- Next 30 days: Build a minimal runtime with necessary controls.

- Final 30 days: Publish a detailed service catalogue.

This phased approach helps avoid the pitfalls that lead to project cancellations - predictions suggest that over 40% of agentic AI projects may fail by 2027 due to rising costs and poor risk management. However, robust deployment alone isn’t enough; it must be paired with strong governance to ensure responsible and compliant AI operations.

AI Governance Frameworks for Responsible Deployment

As AI infrastructure grows, governance becomes the backbone of ensuring that AI delivers value responsibly. A well-structured governance framework aligns AI efforts with organisational values, customer expectations, and legal standards, while addressing risks such as model failures, regulatory challenges, and competitive vulnerabilities [1,37].

A comprehensive governance strategy tackles three levels of risk:

- Tactical risks: Issues like model errors and technical bias.

- Strategic risks: Concerns such as vendor dependency and potential leaks of competitive intelligence.

- Systemic risks: Broader challenges, including regulatory changes.

Rather than relying on static pre-deployment checklists, organisations should adopt dynamic, automated systems that continuously monitor technical performance and business outcomes.

Key steps for effective governance include:

- Forming a cross-functional committee with representatives from legal, IT, HR, compliance, and management to oversee deployment and auditing.

- Setting up environment gates to ensure AI models meet specific criteria - such as data readiness, privacy compliance, and operational objectives - before moving to production.

- Equipping on-call teams with emergency kill switches to disable systems if safety or policy violations arise.
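The gate and kill-switch ideas above can be sketched in a few lines. Everything here is a simplified assumption: the gate names mirror the criteria listed above, while `ReleaseCandidate`, `failed_gates`, and `KillSwitch` are hypothetical names rather than features of a specific platform.

```python
from dataclasses import dataclass


@dataclass
class ReleaseCandidate:
    name: str
    data_ready: bool
    privacy_review_passed: bool
    meets_operational_target: bool


# Each gate is a named check that must pass before promotion to production.
GATES = {
    "data readiness": lambda rc: rc.data_ready,
    "privacy compliance": lambda rc: rc.privacy_review_passed,
    "operational objectives": lambda rc: rc.meets_operational_target,
}


def failed_gates(candidate):
    """Return the names of every gate the candidate does not pass."""
    return [name for name, check in GATES.items() if not check(candidate)]


class KillSwitch:
    """Lets an on-call engineer disable a service without redeploying it."""

    def __init__(self):
        self.engaged = False
        self.reason = None

    def trip(self, reason):
        self.engaged = True
        self.reason = reason

    def guard(self, handler, request):
        if self.engaged:
            return f"service disabled: {self.reason}"
        return handler(request)


candidate = ReleaseCandidate("claims-triage-v3", True, False, True)
print(failed_gates(candidate))

switch = KillSwitch()
before = switch.guard(str.upper, "route claim")
switch.trip("policy violation reported")
after = switch.guard(str.upper, "route claim")
print(before, "|", after)
```

Logging every gate result and every switch activation gives the governance committee an audit trail of each decision.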

Additionally, maintaining a centralised registry for model versions, policy updates, and decision logs is critical for meeting legal and regulatory requirements. As Fisher Phillips puts it:

"Governance is about building a process, following the process, and documenting the process."

Without proper documentation, actions taken are effectively invisible from a compliance perspective.

Organisations that integrate MLOps and data governance into their workflows can significantly reduce the time it takes to move models into production - by up to 40%. Strong governance not only safeguards compliance but also preserves the strategic learning loop, where usage data improves models over time. This ensures AI investments yield lasting business value instead of becoming short-lived experiments.

Practical Roadmap to Scaling AI

Scaling AI from an experimental phase to a fully integrated enterprise solution demands a structured, phased approach. Success hinges on building momentum through clearly defined and achievable steps.

Short-Term: Early Wins to Build Momentum

The first 30 days are all about setting a solid foundation and demonstrating immediate value. Start by identifying one high-impact use case that aligns with a measurable business metric, such as reducing cycle time, lowering costs, or improving customer satisfaction. Evaluate the feasibility, potential ROI, and any regulatory risks involved.

Run this use case alongside existing workflows to assess its impact. Take Guardian Life Insurance Company of America as an example: they piloted an automation tool for their request-for-proposal (RFP) process, cutting response times from 5–7 days down to just 24 hours. They plan to scale this initiative further in 2026.

At this stage, set up a governance committee that includes legal, IT, HR, and compliance teams. This group is responsible for implementing safety protocols, defining operations AI should never handle, and establishing rollback mechanisms for emergencies. Focus on smaller, practical wins - like automating ticket categorisation or pre-filling forms - because these incremental improvements build trust and confidence among stakeholders.

One critical insight: 95% of generative AI pilots in 2025 failed to impact profit and loss because they were treated as standard software projects rather than transformative operational tools. By avoiding this mistake, you set the stage for deeper AI integration.

Mid-Term: Integrating AI into Core Operations

Between days 31 and 60, the goal shifts to embedding AI into core workflows. Transition to "human-in-the-loop" systems, where AI outputs are reviewed and approved by humans before reaching customers. This approach balances efficiency with quality assurance.
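A human-in-the-loop setup boils down to one rule: nothing reaches the customer until a person has approved it. The sketch below shows that rule with a hypothetical `ReviewQueue`; the class, status names, and ticket IDs are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    ticket_id: str
    text: str
    status: str = "pending"  # pending -> approved | rejected


class ReviewQueue:
    """Holds AI-generated drafts until a human reviewer signs off."""

    def __init__(self):
        self._drafts = {}

    def submit(self, ticket_id, text):
        self._drafts[ticket_id] = Draft(ticket_id, text)

    def review(self, ticket_id, approve, edited_text=None):
        draft = self._drafts[ticket_id]
        if edited_text is not None:
            draft.text = edited_text  # the reviewer may correct the draft first
        draft.status = "approved" if approve else "rejected"
        return draft

    def releasable(self):
        """Only approved drafts may be sent to customers."""
        return [d for d in self._drafts.values() if d.status == "approved"]


queue = ReviewQueue()
queue.submit("T-1", "Your refund has been issued.")
queue.submit("T-2", "We cannot help with this request.")
queue.submit("T-3", "Your parcel ships tomorrow.")

queue.review("T-1", approve=True)
queue.review("T-2", approve=False)
# T-3 has not been reviewed yet, so it stays pending and is not released.

print([d.ticket_id for d in queue.releasable()])
```

Tracking how often reviewers edit or reject drafts also gives you a simple quality signal for deciding when a task is ready for more autonomy.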

Your architecture must handle 10 times the expected production volume during load testing. Automate data pipelines and adopt MLOps practices to accelerate production timelines by 40%. At this point, decide whether to use retrieval-augmented generation (RAG) or fine-tuning, depending on your needs. RAG, for instance, is ideal for frequently updated knowledge bases, costing around €38 per 1,000 queries, while fine-tuning works better for repetitive, high-volume tasks at about €19 per 1,000 queries.

Cost efficiency can also be achieved through model cascading. Route simpler queries to cost-effective models, like Mistral 7B, and reserve premium models like GPT-4 for complex tasks. This method can slash costs by up to 87%. Air India is a prime example: their AI virtual assistant automates 97% of over 4 million annual customer queries, saving millions in support costs.
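To show how cascading works in principle, the sketch below routes queries to a "small" or "premium" tier using a deliberately naive heuristic, then estimates the saving from the share of traffic the small tier absorbs. The word limit, the escalation terms, and the prices are invented for illustration and are not vendor pricing; real routers usually rely on a classifier or a confidence score.

```python
def pick_tier(query, max_simple_words=20, escalation_terms=("legal", "contract", "dispute")):
    """Naive router: long queries or sensitive topics go to the premium model."""
    text = query.lower()
    if len(text.split()) > max_simple_words:
        return "premium"
    if any(term in text for term in escalation_terms):
        return "premium"
    return "small"


def blended_saving(share_small, price_small, price_premium):
    """Fractional saving versus sending every query to the premium model."""
    blended = share_small * price_small + (1 - share_small) * price_premium
    return 1 - blended / price_premium


queries = [
    "Where is my order?",
    "How do I reset my password?",
    "I need legal advice on terminating my contract early.",
]
for query in queries:
    print(pick_tier(query), "<-", query)

# Hypothetical prices per 1,000 queries: 1.0 for the small tier, 10.0 for premium.
saving = blended_saving(share_small=0.9, price_small=1.0, price_premium=10.0)
print(f"estimated saving: {saving:.0%}")
```

The saving depends almost entirely on two numbers: how much traffic the small tier can safely handle and the price gap between tiers, so measure both before committing to a target.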

With these systems in place, the focus turns to scaling AI across the organisation.

Long-Term: Enterprise-Wide AI Transformation

The final phase ensures AI becomes a central part of your operations. Successful pilots should be converted into reusable playbooks that other teams can replicate. Adopt a "hub-and-spoke" model, where a central AI team provides infrastructure and standards, while domain-specific teams develop tailored solutions.

Move beyond individual tools to deploy "AI Workers" - autonomous systems capable of handling entire processes. For example, instead of an AI that drafts responses, implement one that resolves Tier-1 support tickets from start to finish.

The results of scaling AI effectively are compelling. Companies report three times higher revenue impact and a 30% boost in EBIT. Microsoft, for instance, saved approximately €470 million in its call centre operations in 2025, while Lumen Technologies projected annual savings of €47 million through disciplined AI execution.

However, the true value of AI lies in its integration with people, processes, and organisational change - accounting for 70% of its impact. Only 30% depends on the technology itself. To sustain this transformation, launch an internal AI academy to provide role-specific training and set up continuous optimisation loops. These loops refine models over time based on usage data, ensuring that AI investments deliver ongoing value rather than becoming fleeting experiments.

"The gap between these outcomes isn't technical sophistication. It's execution discipline." – Likhon, Gen AI Specialist

Conclusion: From Pilot to Enterprise Success

Breaking free from the trap of endless pilot projects isn’t about chasing the next big algorithm or the newest AI model. Instead, it’s about disciplined execution. Organisations that successfully scale AI have three key strengths: clear strategic alignment between leadership and frontline teams, infrastructure designed for production from the start, and a commitment to change management that directly addresses human challenges. These strengths, outlined throughout this guide, are the foundation for turning experimental pilots into meaningful and lasting business results.

When these strategies come together, moving from pilot to production becomes achievable. The numbers speak for themselves: scaling AI can deliver triple the revenue impact and boost EBIT by 30%. Yet the reality is stark: 70–90% of AI initiatives stall at the experimental stage, typically because AI is never embedded into core workflows and incentive structures are not adapted to support it.

"AI success is 10% algorithms, 20% data and technology, 70% people, processes, and cultural transformation." – BCG

Infrastructure plays a critical role here, especially when combined with governance frameworks that drive progress. Elements like high-quality data pipelines, MLOps practices, and compliance with regulations such as the EU AI Act (most of whose provisions apply from 2 August 2026) are essential building blocks. Beyond the technical foundation, organisations must also foster trust, develop new skill sets, and address the fatigue that often accompanies large-scale transformation efforts. Together, these technical and human elements create a solid base for the cultural and operational shifts required for success.

Events like the RAISE Summit are pivotal in this transformation. Held at the Carrousel du Louvre in Paris, this gathering brings together over 9,000 attendees and 350+ speakers to promote cross-industry collaboration and knowledge sharing. These events provide leaders with the opportunity to benchmark their progress, learn from real-world AI deployments, and build the distributed capabilities necessary to turn isolated pilots into enterprise-wide standards. By moving from siloed experiments to shared learning, organisations can unlock the strategic feedback loops that transform AI’s potential into enduring business value. This seamless blend of technology and human collaboration is the true hallmark of evolving from pilot projects to enterprise success.

FAQs

What steps can companies take to address data quality challenges when scaling AI projects?

To address data quality issues when scaling AI, companies need to treat data as a strategic asset. This means putting in place strong governance frameworks and clearly assigning data ownership. Building reusable data products and designing a reliable data architecture are key steps to ensure consistency and maintain quality across the board.

Another critical factor is improving data readiness. This requires making sure that both structured and unstructured data are accurate, complete, and relevant for AI applications. From the outset, organizations should embed data governance into their operations, incorporating workflows for ongoing validation, cleaning, and updates.

By focusing on these measures, businesses can create a dependable foundation for their AI models, reducing the risk of errors and making it easier to scale from initial pilots to full-scale deployment.

How does MLOps help scale AI projects from pilots to full enterprise adoption?

MLOps (Machine Learning Operations) plays a crucial role in taking AI projects from small-scale pilots to full-scale enterprise deployment. It provides the necessary tools, processes, and governance to ensure AI models are not only deployed successfully but also monitored and maintained over time. By addressing common hurdles like data quality issues, integration challenges, and compliance requirements, MLOps creates a solid foundation for AI initiatives.

Through automation of workflows, lifecycle management for models, and standardised operations, MLOps reduces the need for manual intervention and lowers potential risks. This shift allows AI to evolve from isolated experiments into scalable solutions that integrate effortlessly into a company’s core operations, delivering measurable results. By adopting MLOps, organisations can align their AI efforts with broader business goals, setting the stage for long-term success.

Why is it important for leadership to align on AI initiatives in enterprises?

Getting leadership on the same page is key when it comes to successfully bringing AI into an enterprise. Why? Because it creates a shared vision and sets clear priorities. When leaders are aligned, they can highlight AI’s strategic role, rally the organization behind it, and ensure resources are allocated to scale AI efforts effectively. This alignment stops AI projects from stalling in the "trial and error" phase and helps weave them into the company's core operations.

Leadership alignment also plays a big role in managing change. It helps foster a work culture that welcomes fresh ideas and teamwork. Instead of treating AI as a short-term experiment, aligned leaders position it as a long-term investment. This shift in mindset allows companies to see measurable results and achieve steady growth. Without this level of alignment, AI projects risk falling short or failing to deliver their full potential.