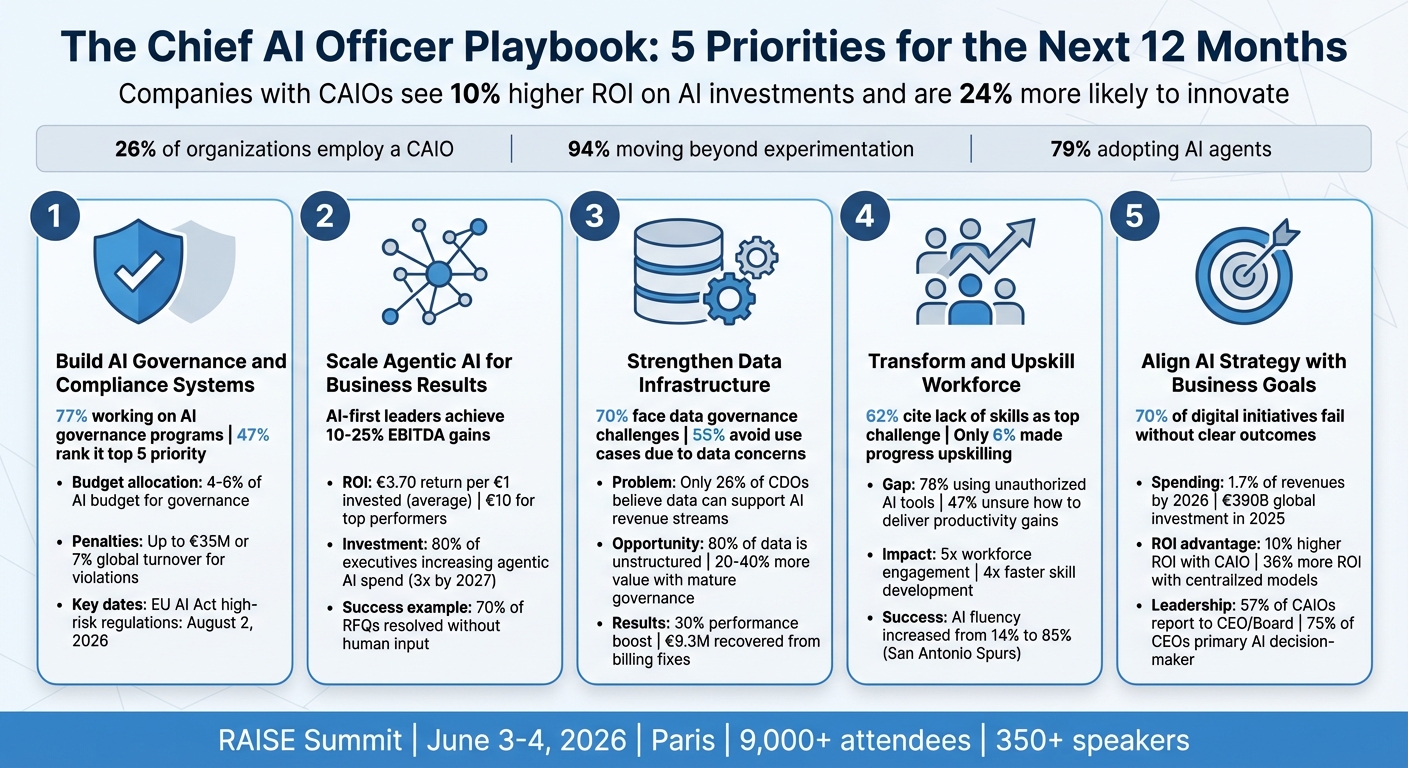

In 2026, the Chief AI Officer (CAIO) role has become essential for companies aiming to succeed with AI. Some 26% of organizations now employ a CAIO, and businesses with this role see a 10% higher ROI on AI investments and are 24% more likely to innovate. However, the job is demanding - CAIOs must manage complex AI systems, comply with strict regulations like the EU AI Act, and deliver measurable business results.

To thrive, CAIOs should focus on these five priorities:

- Establish AI Governance: Build clear frameworks, automate policy enforcement, and meet regulatory requirements to balance innovation and compliance.

- Scale Agentic AI: Identify high-value use cases, improve AI agent performance, and prove ROI by redesigning workflows and aligning AI with business goals.

- Strengthen Data Infrastructure: Invest in unified data systems, improve data quality, and adopt cloud technologies to support scalable AI solutions.

- Upskill the Workforce: Train employees in AI literacy and integrate AI tools into daily operations to improve productivity and collaboration.

- Align AI Strategy with Business Goals: Create actionable roadmaps, set clear KPIs, and ensure cross-departmental alignment to tie AI efforts directly to organizational success.

The pressure is high, with 94% of companies moving beyond experimentation and 79% adopting AI agents. Effective execution of these priorities will enable businesses to outperform competitors and navigate the fast-evolving AI landscape.

5 Key Priorities for Chief AI Officers in 2026


Priority 1: Build AI Governance and Compliance Systems

Navigating the complexities of AI deployment requires a solid governance system - one that balances innovation with compliance. By late 2025, 77% of organizations were actively working on AI governance programs, with 47% ranking it among their top five strategic priorities. However, only 35% of C-suite executives could fully explain their AI models to stakeholders, highlighting the need for stronger governance structures.

The foundation of effective governance lies in the "5 Ps" Framework: People (interdisciplinary teams), Priorities (risk triaging), Processes (checks and balances), Platforms (monitoring and documentation), and Progress (maturity metrics). This framework emphasizes practical implementation over theoretical policies. As Soumendra Kumar Sahoo aptly said:

Governance is no longer a 'nice to have'; it is business continuity.

Organizations typically allocate roughly 3% to 6% of their AI budgets to governance, with the share rising as programs grow. For budgets under €10 million, this translates to 3–4% and about 0.5–1 full-time staff. Medium-sized programs (€10–100 million) require 4–5% and 2–4 staff, while larger programs (over €100 million) need 5–6% and 6–12 dedicated personnel. Here's how to build a robust governance system that aligns with industry standards.
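To make the budget bands concrete, here is a minimal Python sketch that turns an AI budget into an indicative governance spend and staffing range. The band figures come from the paragraph above; the names (`GovernanceTier`, `governance_estimate`) and the handling of budgets that sit exactly on a boundary are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GovernanceTier:
    max_budget_eur: float   # upper bound of the AI budget band
    share_pct: tuple        # governance share of the AI budget, in percent (low, high)
    staff_fte: tuple        # dedicated full-time staff (low, high)


# Bands as quoted in the text; treating the upper bound as inclusive is an assumption.
TIERS = [
    GovernanceTier(10_000_000, (3, 4), (0.5, 1)),
    GovernanceTier(100_000_000, (4, 5), (2, 4)),
    GovernanceTier(float("inf"), (5, 6), (6, 12)),
]


def governance_estimate(ai_budget_eur: float) -> dict:
    """Return the indicative governance spend and staffing range for a budget."""
    if ai_budget_eur <= 0:
        raise ValueError("budget must be positive")
    tier = next(t for t in TIERS if ai_budget_eur <= t.max_budget_eur)
    low_pct, high_pct = tier.share_pct
    return {
        "spend_eur": (ai_budget_eur * low_pct / 100, ai_budget_eur * high_pct / 100),
        "staff_fte": tier.staff_fte,
    }


if __name__ == "__main__":
    print(governance_estimate(25_000_000))
```

Treat the result as a starting point for a budget conversation, not a benchmark to hit exactly.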

Create AI Governance Frameworks

Start by conducting a thorough gap analysis, creating an inventory of AI systems, drafting use policies, and rolling out a monitoring proof of concept - all within the first 90 days. Role-based training and an incident response playbook are also essential steps.

Establish two governance layers: an AI Governance Committee for executive oversight and an AI Review Board for operational evaluations. Use tiered approvals to fast-track low-risk AI while requiring board-level sign-off for high-risk systems.

One game-changer is automating policy enforcement. Instead of relying on static PDF policies, integrate governance policies directly into API gateways and CI/CD pipelines. For instance, MediaMarkt implemented automated model cards and a cross-functional review board in July 2025, cutting AI project approval cycles from 12 weeks to just 3 weeks and boosting revenue per user by 14%.
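As a rough illustration of what "policy in the pipeline" can look like, the sketch below checks a model card before a deployment step is allowed to run. The required fields, the `board_approved` flag, and the function names are hypothetical; a real gate would encode your own policy and plug into whichever CI/CD system you use.

```python
import sys

# Hypothetical required fields for a model card; adapt to your own policy.
REQUIRED_FIELDS = ("owner", "purpose", "limitations", "risk_tier")


def policy_violations(model_card: dict) -> list:
    """Return human-readable violations; an empty list means the gate passes."""
    violations = [
        f"missing field: {name}"
        for name in REQUIRED_FIELDS
        if not str(model_card.get(name, "")).strip()
    ]
    # Illustrative rule: high-risk systems need a recorded board approval.
    if model_card.get("risk_tier") == "high" and not model_card.get("board_approved"):
        violations.append("high-risk system lacks board approval")
    return violations


def gate(model_card: dict) -> int:
    """Exit code for a CI step: 0 lets the pipeline continue, 1 blocks it."""
    problems = policy_violations(model_card)
    for problem in problems:
        print(f"POLICY VIOLATION: {problem}", file=sys.stderr)
    return 1 if problems else 0


if __name__ == "__main__":
    card = {"owner": "pricing-team", "purpose": "demand forecast",
            "limitations": "EU stores only", "risk_tier": "low"}
    sys.exit(gate(card))
```

Because the check returns an exit code, it can run as an ordinary pipeline step and block a release the same way a failing unit test would.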

Categorize AI systems by autonomy levels - from A0 (Assist/Read-only) to A5 (Self-direct) - and implement controls accordingly. High-risk autonomous systems must include a "kill switch" that can halt the system and restore a safe state with a Mean Time to Recovery of 60 seconds or less. Ensure these frameworks are aligned with stringent EU regulations.

Meet Regulatory Requirements

Under the EU AI Act, the ban on prohibited AI practices took effect on 2nd February 2025, with General-Purpose AI model rules following on 2nd August 2025. Rules for high-risk systems will come into force on 2nd August 2026. As the European Commission stated:

There is no stop the clock. There is no grace period. There is no pause.

Penalties for violations are steep - up to €35 million or 7% of global annual turnover, whichever is higher. Regulators are also already punishing weak automated controls under other regimes: Block Inc. (Cash App), for example, faced a €75 million fine in January 2025 for inadequate automated Anti-Money Laundering controls.

Start by building a comprehensive AI system inventory. Include in-house models, vendor SaaS with embedded AI, and experimental pilots. Document the system owner, purpose, data sources, and affected groups. Determine whether you're a Provider (developing or branding AI) or a Deployer (using AI in business processes), as obligations differ significantly.

Classify AI systems into four tiers:

- Unacceptable: Banned practices

- High-risk: Strictly regulated systems

- Transparency-risk: Requires disclosure

- Minimal/No risk: Low regulatory concerns

High-risk systems - such as those used in biometrics, critical infrastructure, education, employment, or essential services - must undergo conformity assessments and secure CE marking before market placement. Align these efforts with GDPR compliance, which includes data protection impact assessments and lawful data processing requirements. In France, the CNIL often oversees AI systems impacting fundamental rights and data protection.
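The inventory and tiering steps above can be sketched as a simple record plus a first-pass triage function. This is a simplified illustration, not a legal classification: the field names, the two boolean flags, and the order of the checks are assumptions, and the high-risk domains are only the examples named in the text.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"   # develops or brands the AI system
    DEPLOYER = "deployer"   # uses the AI system in business processes


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high-risk"
    TRANSPARENCY = "transparency-risk"
    MINIMAL = "minimal/no risk"


# Domains named in the text above; a real classification needs legal review.
HIGH_RISK_DOMAINS = {
    "biometrics", "critical infrastructure", "education",
    "employment", "essential services",
}


@dataclass
class AISystemRecord:
    name: str
    owner: str
    purpose: str
    role: Role
    domain: str
    data_sources: list = field(default_factory=list)
    affected_groups: list = field(default_factory=list)
    uses_banned_practice: bool = False    # hypothetical flag set by legal review
    interacts_with_people: bool = False   # hypothetical flag, e.g. a chatbot


def triage(record: AISystemRecord) -> RiskTier:
    """First-pass triage in order of severity; not a legal determination."""
    if record.uses_banned_practice:
        return RiskTier.UNACCEPTABLE
    if record.domain.lower() in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if record.interacts_with_people:
        return RiskTier.TRANSPARENCY
    return RiskTier.MINIMAL


if __name__ == "__main__":
    cv_screener = AISystemRecord(
        name="cv-screener", owner="HR Ops", purpose="rank job applicants",
        role=Role.DEPLOYER, domain="Employment",
        data_sources=["applicant CVs"], affected_groups=["job applicants"],
    )
    print(cv_screener.name, "->", triage(cv_screener).value)
```

Keeping the role (Provider or Deployer) on the record matters because the same system can carry different obligations depending on which role you hold.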

Set Up Risk Assessment and Audit Processes

Effective governance requires both regulatory compliance and proactive risk management. Use the NIST AI Risk Management Framework's four core functions:

- Govern: Establish culture and structure

- Map: Define context and use cases

- Measure: Assess and benchmark risks

- Manage: Prioritize and mitigate risks

For high-risk systems, conduct thorough assessments, including:

- Data Protection Impact Assessments

- Fundamental Rights Impact Assessments

- AI Impact Assessments

Pre-deployment validation should include bias testing across demographics, safety testing for edge cases, and security assessments. Red-teaming can help stress-test systems for misuse and robustness. Assign roles using a RACI matrix: Legal/Privacy for impact reviews, Security for safeguards, and ML teams for inventory and drift checks.

Keep audit-ready documentation, such as model inventories, model cards detailing purpose and limitations, and audit trails capturing inputs, outputs, and decision overrides. Shift from annual audits to continuous compliance monitoring, utilizing "evidence vaults" for real-time readiness.
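One way to make an audit trail tamper-evident is to chain entries together with hashes, so that editing an old record breaks every later link. The sketch below shows the idea using only the Python standard library; the `AuditTrail` class and its field names are illustrative assumptions rather than a reference to any particular "evidence vault" product.

```python
import hashlib
import json
from typing import Optional


class AuditTrail:
    """Append-only log where each entry commits to the previous entry's hash."""

    def __init__(self) -> None:
        self.entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash everything except the stored hash itself, with stable key order.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def record(self, system: str, inputs: dict, output: str,
               override: Optional[str] = None) -> dict:
        entry = {
            "system": system,
            "inputs": inputs,
            "output": output,
            "override": override,   # human decision override, if any
            "prev_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that the chain links are intact."""
        prev = ""
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.record("credit-scoring", {"applicant_id": 17}, "declined")
    trail.record("credit-scoring", {"applicant_id": 17}, "approved", override="analyst review")
    print("chain intact:", trail.verify())
```

In production you would persist entries to write-once storage; the in-memory list here only demonstrates the chaining logic.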

Finally, hold vendors accountable. Include AI-specific clauses in contracts, covering audit rights, bias testing cooperation, and indemnification for AI-related harm. Procurement teams increasingly demand transparency on training-data origins and audit capabilities.

Priority 2: Scale Agentic AI for Business Results

Once strong governance is in place, the next step is scaling agentic AI to deliver measurable business outcomes. While 95% of organisations report no return on investment (ROI) from generative AI, a small group of "AI-first" leaders are achieving EBITDA gains of 10–25%. The key difference? It’s not the technology itself, but how these leaders identify, implement, and scale agentic AI solutions. With 80% of executives planning to increase investments in agentic AI - and spending projected to triple by 2027 - the window to gain an edge is closing. Among organisations that do see returns, AI delivers an average of €3.70 for every €1 invested, and top performers see returns as high as €10. Here’s how to join their ranks.

Select High-Value Use Cases

Rather than focusing on automating isolated tasks, aim to redesign entire processes for collaboration between multiple AI agents. High-value opportunities often fall into six categories: Content Creation, Research, Coding, Data Analysis, Ideation/Strategy, and Automations. As Erik Brynjolfsson of Stanford University notes:

This is a time when you should be getting benefits [from AI] and hope that your competitors are just playing around and experimenting.

To prioritise effectively, use the Impact/Effort Framework. This approach categorises use cases into four quadrants: High ROI Focus (quick wins to tackle first), Self-service (tools for individual productivity), High-value/High-effort (long-term transformational projects), and High-effort/Low-impact (not worth pursuing). Pay particular attention to back-office functions like finance, procurement, and risk management, where agentic AI can replace costly outsourcing.
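The quadrant logic can be sketched in a few lines of Python. The scores, the 0.5 threshold, and the function name are illustrative assumptions; placing "Self-service" in the low-impact, low-effort corner is also an interpretation, since the text does not spell out its axes.

```python
def quadrant(impact: float, effort: float, threshold: float = 0.5) -> str:
    """Map impact and effort scores in [0, 1] to one of the four quadrants."""
    high_impact = impact >= threshold
    high_effort = effort >= threshold
    if high_impact and not high_effort:
        return "High ROI Focus"
    if high_impact and high_effort:
        return "High-value/High-effort"
    if not high_effort:
        return "Self-service"
    return "High-effort/Low-impact"


if __name__ == "__main__":
    # Made-up scores for three candidate use cases.
    candidates = {
        "invoice matching": (0.8, 0.3),
        "meeting summaries": (0.3, 0.2),
        "end-to-end procurement agent": (0.9, 0.9),
    }
    for name, (impact, effort) in candidates.items():
        print(f"{name}: {quadrant(impact, effort)}")
```

Scoring impact and effort with the business owner, rather than inside the AI team alone, tends to make the resulting ranking easier to defend.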

Take Promega, for instance. This life sciences company used ChatGPT Enterprise to draft email campaigns and briefs, saving 135 hours in just six months. Marketing Strategist Kari Siegenthaler spearheaded the initiative, enabling the team to focus on higher-level strategy. Similarly, Lockheed Martin partnered with IBM to cut the number of its data and AI tools by 50% and automate 216 data catalogue definitions, allowing 10,000 engineers to build large-scale agentic solutions quickly.

When evaluating use cases, consider eight dimensions: business value, use case clarity, data readiness, technology setup, talent availability, governance, change management, and partner ecosystems. Start by breaking down current processes to understand task durations and costs before deploying AI agents. Assign accountability to General Managers with clear ROI goals for AI projects, rather than leaving these initiatives solely in the hands of IT teams. Once high-value opportunities are identified, the next focus should be on optimising agent performance.

Improve AI Agent Performance

To enhance reliability, use a modular orchestration structure with distinct roles: Router (intent classification), Planner (task sequencing), Knowledge (retrieval), Tool Executor (action-taking), Supervisor (guardrails), and Critic (quality checks). This setup simplifies debugging and refinement.
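To show why separate roles simplify debugging, here is a toy Python pipeline in which each role is a small function that reads and updates a shared task object. Every function body is a stub (keyword matching, constant strings) invented for illustration; in a real system each role would call a model, a retriever, or a tool.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    request: str
    intent: str = ""
    plan: list = field(default_factory=list)
    context: str = ""
    result: str = ""
    approved: bool = False
    trace: list = field(default_factory=list)   # which roles touched the task


def router(task: Task) -> None:
    # Intent classification (toy keyword rule).
    task.intent = "refund" if "refund" in task.request.lower() else "general"


def planner(task: Task) -> None:
    # Task sequencing.
    task.plan = ["look up policy", "draft reply"]


def knowledge(task: Task) -> None:
    # Retrieval, stubbed with a constant string.
    task.context = f"policy notes for {task.intent}"


def tool_executor(task: Task) -> None:
    # Action-taking.
    task.result = f"Draft reply using: {task.context}"


def supervisor(task: Task) -> None:
    # Guardrail: refuse to pass along an empty result.
    if not task.result:
        raise ValueError("guardrail: empty result")


def critic(task: Task) -> None:
    # Quality check: the reply must mention the detected intent.
    task.approved = task.intent in task.result


PIPELINE = [router, planner, knowledge, tool_executor, supervisor, critic]


def run(request: str) -> Task:
    task = Task(request)
    for role in PIPELINE:
        role(task)
        task.trace.append(role.__name__)
    return task


if __name__ == "__main__":
    done = run("I would like a refund for order 1182")
    print(done.intent, done.approved, done.trace)
```

Because each role writes to its own field and the trace records the order of execution, a bad answer can be traced to the role that produced it instead of to one opaque prompt.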

Implement a Graduated Autonomy Framework with four levels: Tier 1 (Shadow Mode/Assisted), Tier 2 (Supervised/Human-in-the-loop), Tier 3 (Guided/Human-on-the-loop), and Tier 4 (Full Autonomy). This approach tackles what BCG calls "the trust deficit":

The greatest barrier to scaling agentic AI isn't technology, it's trust.
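The four tiers translate naturally into a small decision function that says who acts next. In this sketch the tier names follow the framework above, while the returned action labels and the 0.8 confidence threshold for Tier 3 escalation are assumptions made for illustration.

```python
from enum import IntEnum


class AutonomyTier(IntEnum):
    SHADOW = 1       # Tier 1: agent only suggests, a human does the work
    SUPERVISED = 2   # Tier 2: human-in-the-loop approves each action
    GUIDED = 3       # Tier 3: human-on-the-loop steps in on exceptions
    FULL = 4         # Tier 4: full autonomy


def next_step(tier: AutonomyTier, confidence: float,
              exception_threshold: float = 0.8) -> str:
    """Decide who acts next; the confidence threshold is an illustrative assumption."""
    if tier is AutonomyTier.SHADOW:
        return "log_suggestion_only"
    if tier is AutonomyTier.SUPERVISED:
        return "await_human_approval"
    if tier is AutonomyTier.GUIDED and confidence < exception_threshold:
        return "escalate_to_human"
    return "execute"


if __name__ == "__main__":
    for tier in AutonomyTier:
        print(tier.name, "->", next_step(tier, confidence=0.6))
```

Promoting an agent from one tier to the next then becomes a configuration change backed by evidence, which is one practical way to close the trust gap gradually.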

Each agent should have a Business Context Fabric that includes Objectives (focused on outcomes), Resources (data and tools), and Constraints (guardrails and brand tone). For better precision and speed, use workflow-specific Small Language Models (SLMs) instead of relying solely on large, generic models. Organisations that excel in areas like cybersecurity, ethics, and SLM adoption are 32 times more likely to achieve top-tier results.

For example, a global industrial goods company reimagined its quote-to-order process in early 2026, deploying four specialised agents (Assessment, Recording, Status, Lead-Time). This system now resolves 70% of RFQs without human input, cutting labour costs by 30–40% and generating millions in extra revenue. Similarly, a global shipbuilder reduced engineering efforts by 40% and design lead times by 60% by implementing agents for a multi-step design process in late 2025.

To measure success, go beyond productivity metrics. Track agent-to-human handoff rates, reasoning coherence scores, and decision accuracy. Develop a "Digital Employee Handbook" that outlines rules for AI agents, including data access permissions and defined roles in decision-making. Use "Policy-as-Code" to embed these rules directly into the system, enabling the Supervisor agent to enforce policies like redaction and human-in-the-loop thresholds at runtime.
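A minimal sketch of "Policy-as-Code" might look like the following: a policy object holds the rules, and a single function applies them to every action an agent proposes. The `Policy` fields, the €1,000 review threshold, and the simple email pattern are hypothetical; real redaction usually needs a dedicated PII detection step.

```python
import re
from dataclasses import dataclass

# Deliberately simple pattern for illustration; it will not catch every address.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass(frozen=True)
class Policy:
    redact_emails: bool = True
    human_review_above_eur: float = 1_000.0   # hypothetical approval threshold


def enforce(policy: Policy, action: dict) -> dict:
    """Return a copy of the proposed action with policy decisions applied."""
    checked = dict(action)
    if policy.redact_emails:
        checked["message"] = EMAIL.sub("[REDACTED]", checked.get("message", ""))
    checked["needs_human"] = checked.get("amount_eur", 0) > policy.human_review_above_eur
    return checked


if __name__ == "__main__":
    proposed = {"message": "Refund approved for jane.doe@example.com", "amount_eur": 2_500}
    print(enforce(Policy(), proposed))
```

Keeping the rules in a versioned object like this means a policy change is reviewed and deployed like any other code change, and the Supervisor role can apply it at runtime.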

Prove ROI and Address Scaling Obstacles

Profitability hinges on reducing agent time, computational costs, and human supervision by leveraging memory and specialisation. Follow the 10/20/70 Rule: allocate 10% of effort to algorithms, 20% to technology and data, and 70% to redesigning processes and workflows. Michał Iwanowski, VP of Technology & AI Advisory at deepsense.ai, explains:

Strategic alignment means moving ROI targets from the innovation lab to the General Manager's desk. Real value is captured in the P&L through redesigned workflows, not just through isolated technical pilots.
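As a quick sanity check on a project plan, the 10/20/70 Rule can be expressed as simple arithmetic. The helper below is a sketch with hypothetical names; the unit of effort (person-days, euros, or anything else) is whatever you pass in.

```python
# Percentages taken from the 10/20/70 Rule described above.
SPLIT = {"algorithms": 10, "technology_and_data": 20, "process_redesign": 70}


def allocate(total_effort: float) -> dict:
    """Split an effort budget across the three areas of the 10/20/70 Rule."""
    return {area: total_effort * pct / 100 for area, pct in SPLIT.items()}


if __name__ == "__main__":
    # Example: a 200 person-day programme.
    for area, days in allocate(200).items():
        print(f"{area}: {days:.0f} person-days")
```

If a plan shows most of the effort going to models and tooling, that is a signal the workflow redesign has been under-scoped.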

Avoid premature ROI evaluations - the full benefits of agentic AI often take at least 14 months to materialise. Use a 90-Day Plan to guide implementation: Days 0–30 (prove the concept with one use case), Days 31–60 (add actions and human-in-the-loop), Days 61–90 (productise and create templates for self-service).

Shift from standalone assistants to centralised agentic platforms with shared memory, orchestration, and governance. This transition can cut costs by up to 30% and boost productivity by 25%. Don’t wait for perfect data; systems using Retrieval-Augmented Generation (RAG) can often work well even with semi-structured or decentralised data.

Finally, weigh the costs of building versus buying. Vendor platforms with prebuilt agent capabilities can cost up to €1.5 million annually per use case - around three times the cost of an in-house solution. Design systems with modularity to avoid vendor lock-in, enabling quick swaps of language models or orchestration layers as technology evolves. With 78% of C-suite executives believing that fully leveraging agentic AI requires a new operating model, those who rethink processes from the ground up will see the greatest rewards. Overcoming these challenges is critical for CAIOs to drive meaningful business impact and solidify their strategic role.

Priority 3: Strengthen Data Management and Infrastructure

For agentic AI to thrive, it needs solid data foundations. Yet, 70% of organizations adopting generative AI face challenges with data governance and integration, and 55% avoid certain use cases entirely due to concerns about data readiness. The numbers are telling: only 26% of Chief Data Officers worldwide believe their current data can support new AI-driven revenue streams. As Ed Lovely, Vice President and Chief Data Officer at IBM, aptly states:

When you think about scaling AI, data is the foundation.

The problem isn’t just about having enough data - it’s about fragmented, inconsistent, and inaccessible data. Over 80% of an organization’s data is unstructured (think videos, images, and text), yet 80% of companies that aren’t high performers struggle to organize it effectively. On the other hand, organizations with mature AI governance extract 20–40% more value from their models. So, how do you build infrastructure that works?

Build AI-Ready Data Systems

To create a strong foundation, shift from siloed data strategies to an integrated architecture that connects all data formats into a unified system. This setup should include data lakehouses for flexible storage, vector databases for managing unstructured data, and scalable object storage to handle high-volume, multimodal AI workloads.

One effective strategy is adopting a Medallion Architecture, which organizes data into three layers:

- Bronze: Raw, ingested data.

- Silver: Validated, processed data.

- Gold: Business-ready datasets optimized for AI.

Focusing on Gold-layer datasets for key areas like finance or supply chain ensures AI agents deliver accurate and consistent insights.
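The three layers can be illustrated with plain Python, using a handful of made-up invoice rows. In practice these steps would run on a lakehouse engine rather than in-memory lists, and the function names and validation rules here are assumptions chosen to keep the example short.

```python
from datetime import date

# Bronze: raw, ingested rows (made-up sample data).
bronze = [
    {"invoice": "A1", "amount": "120.50", "date": "2026-01-05"},
    {"invoice": "A1", "amount": "120.50", "date": "2026-01-05"},   # duplicate
    {"invoice": "A2", "amount": "n/a", "date": "2026-01-06"},      # invalid amount
    {"invoice": "A3", "amount": "80.00", "date": "2026-01-06"},
]


def to_silver(rows):
    """Validate and type the raw rows, dropping duplicates and bad records."""
    seen, clean = set(), []
    for row in rows:
        try:
            amount = float(row["amount"])
            day = date.fromisoformat(row["date"])
        except ValueError:
            continue   # skip rows that fail validation
        if row["invoice"] not in seen:
            seen.add(row["invoice"])
            clean.append({"invoice": row["invoice"], "amount": amount, "date": day})
    return clean


def to_gold(rows):
    """Aggregate validated rows into a business-ready daily revenue table."""
    totals = {}
    for row in rows:
        totals[row["date"]] = totals.get(row["date"], 0.0) + row["amount"]
    return totals


if __name__ == "__main__":
    silver = to_silver(bronze)
    gold = to_gold(silver)
    print(len(bronze), "bronze rows ->", len(silver), "silver rows ->", gold)
```

An AI agent answering finance questions would query only the Gold output, so the duplicate and the invalid row never reach it.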

Real-world examples highlight the impact of this approach. In 2025, Ford Motor Company migrated much of its database infrastructure to Google Cloud managed services, achieving a 30% performance boost and freeing up significant team resources. Similarly, a North American utility company improved efficiency by 20–25% in its first year and recovered around €9.3 million by addressing billing discrepancies through data maturity mapping and lineage pilots.

A modular tech stack is key to staying agile. This setup allows updates to rapidly evolving components like large language model (LLM) hosting without disrupting more stable elements like cloud infrastructure. AI agents can further enhance efficiency by automating tasks such as query writing, data migration, transformation, and metadata tagging. Treat unstructured data as a priority asset, ensuring it’s tagged and stored in searchable formats like vector databases.

With this foundation in place, the next step is rigorous governance and quality control.

Improve Data Governance and Quality

AI systems can turn small data issues into significant business risks by generating confident but flawed outputs. To mitigate this, organizations should adopt a Five-Pillar AI Data Quality Framework:

- Semantic Alignment: Use a standardized glossary for consistent terminology.

- Context Coherence: Deduplicate and time-scope data collections with clear ownership.

- Bias and Coverage: Evaluate data representativeness before training.

- Provenance and Lineage: Ensure outputs can be traced back to their source.

- Feedback and Recovery: Allow users to flag issues and trigger data corrections.

Establishing data stewardship is also crucial. Here, business units define data quality standards while a central team handles interoperability and security. Treat data as a reusable product - designed for specific purposes and easily accessible across the organization. Tools for data observability can monitor lineage, detect anomalies, and address quality issues in real time.

A 90-Day Operating Model can help kickstart these efforts:

- Days 1–30: Identify eligible data sources, prioritize fields for key use cases, and assign data owners.

- Days 31–60: Develop scorecards for data quality and introduce mechanisms to flag issues.

- Days 61–90: Implement pre-ingestion checks to catch stale or biased data and publish enriched datasets with traceable lineage.
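The scorecard and pre-ingestion steps in this plan can be prototyped in a few lines. The sketch below scores a batch on completeness and freshness and only lets complete, fresh records through; the field name `updated_at`, the metric definitions, and the function name are assumptions, and bias checks are left out because they depend heavily on the dataset.

```python
from datetime import datetime, timedelta, timezone


def quality_scorecard(records, required_fields, max_age_days, now=None):
    """Score a batch on completeness and freshness; both metrics are in [0, 1]."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)

    def is_complete(record):
        return all(record.get(name) not in (None, "") for name in required_fields)

    def is_fresh(record):
        updated = record.get("updated_at")
        return updated is not None and updated >= cutoff

    total = len(records)
    return {
        "completeness": sum(map(is_complete, records)) / total if total else 0.0,
        "freshness": sum(map(is_fresh, records)) / total if total else 0.0,
        # Only complete and fresh records move on to ingestion.
        "accepted": [r for r in records if is_complete(r) and is_fresh(r)],
    }


if __name__ == "__main__":
    now = datetime(2026, 3, 1, tzinfo=timezone.utc)
    batch = [
        {"id": 1, "owner": "finance", "updated_at": now - timedelta(days=2)},
        {"id": 2, "owner": "finance", "updated_at": now - timedelta(days=90)},   # stale
        {"id": 3, "owner": "", "updated_at": now - timedelta(days=1)},           # no owner
    ]
    card = quality_scorecard(batch, ["id", "owner"], max_age_days=30, now=now)
    print(round(card["completeness"], 2), round(card["freshness"], 2), len(card["accepted"]))
```

Publishing these two numbers per dataset each week gives data owners a concrete target during the first 90 days.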

Standardized documentation, such as "Model Cards" and "Data Sheets", can further enhance governance by detailing the purpose, limitations, metrics, and privacy considerations of AI systems. This level of detail is increasingly critical, especially with regulations like the EU AI Act, which can impose penalties of up to 7% of global turnover for non-compliance.

Use Cloud Architectures for Data Access

Cloud infrastructure can make data more accessible and scalable for AI - if designed with governance in mind. Use the Model Context Protocol (MCP) to provide AI agents with secure, protocol-level data access while maintaining identity, policy, and audit controls. Each tool call should be authenticated to enforce permissions.

Enhance traditional Retrieval-Augmented Generation with agentic retrieval, which combines reasoning and multi-source synthesis to deliver just-in-time context while respecting existing permissions. This allows AI agents to interact with enterprise data using natural language, making insights more accessible to non-technical users.

Consider neoclouds - specialized cloud providers tailored for AI workloads. These platforms offer high-density GPU access, liquid cooling (up to 600 kilowatts per rack), and modular scalability. They also simplify pricing with per-GPU, per-hour models, avoiding the complexity of traditional cloud services. Choose data center locations carefully to meet local regulatory and data sovereignty requirements.

Adopting a federated governance model can further balance control and flexibility. In this setup, business-aligned data stewards manage the meaning and quality of data, while a central team oversees security and interoperability. This approach ensures data strategies align with both organizational goals and compliance needs.

As Ram Katuri, Senior Principal at Google Cloud, emphasizes:

If your data isn't ready for AI, neither is your business.


Priority 4: Transform and Upskill Your Workforce

The biggest obstacle to scaling AI isn’t technology - it’s talent. A striking 62% of C-suite executives identify a lack of skills as their top challenge, yet only 6% of organisations have made meaningful strides in upskilling their teams. While 80% of leaders regularly use AI tools, nearly half of employees (47%) feel unsure about how to deliver the productivity gains expected of them. Even more concerning, 78% of knowledge workers are resorting to "Bring Your Own AI" tools without proper oversight.

Addressing this doesn’t just call for training - it requires a complete transformation. Companies that foster effective human-AI collaboration see workforce engagement increase fivefold and skill development accelerate four times faster. As Dr. Jules White from Vanderbilt University puts it:

AI is no longer an IT initiative... It is now a strategic workforce capability - a new category of labour that every employee can deploy through conversation, creativity, and problem-solving.

Prepare for the Future of Work

Building teams that integrate human and AI capabilities requires rethinking workflows from the ground up. Start by auditing existing processes to align repetitive tasks with AI's strengths. Training should be tailored to four distinct groups: executives, managers, team leads, and daily users. Each group has unique needs - executives focus on strategy and leadership, while daily users benefit from hands-on instruction in areas like prompt engineering.

For example, in 2024, CMA CGM's CEO Rodolphe Saadé spearheaded an AI skills accelerator programme by personally engaging with senior managers and visiting training facilities, creating a culture of continuous learning. Giving employees the time and freedom to experiment with AI - without fear of failure or job displacement - is crucial. Brad Strock, Executive Coach and former CIO of PayPal, highlights this point:

The real challenge with AI isn't the technology - it's getting people to trust it. If you don't build trust first, no AI initiative will succeed.

One European retail bank demonstrated this by embedding an "Ops AI Agent" into their lending operations. This automated document validation, cutting approval times from several days to under 30 minutes and boosting productivity by 50%. Such efforts empower Chief AI Officers to drive meaningful change across their organisations.

Launch AI Literacy Programmes

Effective upskilling follows a three-phase approach: Foundational (introducing core concepts), Applied (hands-on practice), and Embedded (integrating AI into daily tasks). Instead of focusing solely on technical skills like coding, organisations should promote AI fluency - the ability to identify impactful use cases, select the right tools, and apply sound judgment.

Take Workday’s "EverydayAI" initiative in 2025, which upskilled nearly 20,000 employees by dedicating "experimentation time" informed by people analytics. Similarly, the San Antonio Spurs increased AI fluency from 14% to 85% by embedding training into daily workflows. Peer-to-peer learning can amplify these efforts; for instance, The Estée Lauder Companies created a "GPT Lab" where over 1,000 employee ideas were collected, leading to prototypes and scalable solutions.

To measure success, use the Kirkpatrick method. This framework evaluates outcomes from learner satisfaction to competency gains, productivity improvements, and overall business impact. Setting clear adoption goals is also essential. Moderna’s CEO, for example, encouraged employees to use ChatGPT 20 times daily, embedding it as a core tool. Once internal upskilling is underway, external networking can further enhance these efforts.

Use Networking Opportunities

Networking builds on internal progress by offering fresh insights and peer comparisons. Industry events like the RAISE Summit allow AI leaders to share experiences, discover new tools, and foster connections. These gatherings are invaluable for learning from others facing similar challenges and for accessing strategies that have already been tested. Although 84% of executives predict regular human-AI collaboration by 2028, only 26% of employees have received training to work effectively alongside AI. Networking with other Chief AI Officers helps identify gaps, benchmark progress, and adopt proven frameworks to accelerate workforce transformation while avoiding common missteps.

Priority 5: Align AI Strategy with Business Goals

Even the most advanced AI initiatives can fall short without a clear connection to business outcomes. A staggering 70% of digital initiatives fail to deliver value because leaders often see transformation as a one-time goal rather than an ongoing process of adjustment and improvement. With AI spending projected to account for 1.7% of total revenues by 2026, Chief AI Officers (CAIOs) must ensure every euro invested is tied to measurable business results.

By building on strong governance and scalable technology, cross-functional teams can work together to ensure AI efforts lead to tangible outcomes. As Toby Bowers, Vice President of Commercial Cloud & AI Marketing at Microsoft, puts it:

Success looks different from one organisation to the next - and what it looks like may change over time depending on your unique business challenges and objectives.

This approach means shifting away from "tool sprawl" - buying individual solutions without a clear return on investment (ROI) - and instead focusing on initiatives that deliver end-to-end results. The difference lies in how CAIOs approach planning, budgeting, and engaging stakeholders.

Create AI Roadmaps and Budgets

A structured roadmap can guide AI efforts effectively. Consider a three-horizon model:

- Horizon 1 (0–6 months): Focus on quick wins and foundational projects.

- Horizon 2 (6–18 months): Scale predictive intelligence solutions.

- Horizon 3 (18+ months): Develop and implement autonomous systems.

Rather than stretching resources too thin, use a 30-60-90 day framework to turn strategy into action. Start by aligning your portfolio and selecting 2–3 proof points in the first 30 days - ideally one aimed at efficiency and another at growth. During days 31–60, launch pilots and track KPIs weekly. By days 61–90, make decisions on whether to continue or pivot, and secure funding for the next phase. As Ameya Deshmukh from EverWorker explains:

Most companies don't fail at AI because they lack ideas. They fail because they lack sequencing.

Before launching pilots, work with the finance department to create an "AI Value Ledger" that tracks key metrics like time saved, revenue gains, and risk reduction. Agreeing on these measures up front ensures transparent and trusted ROI reporting. Organisations that prioritise thorough data preparation can cut AI implementation timelines by up to 40%.
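An "AI Value Ledger" can start as a very small data structure. The sketch below records cost and the three benefit types for each initiative and computes the return per euro invested; the class and field names, the hourly rate, and the sample figures are all hypothetical and should be replaced with values agreed with finance.

```python
from dataclasses import dataclass


@dataclass
class LedgerEntry:
    initiative: str
    cost_eur: float
    hours_saved: float = 0.0
    revenue_gain_eur: float = 0.0
    risk_reduction_eur: float = 0.0   # finance-agreed estimate of avoided loss


def benefit_eur(entry: LedgerEntry, hourly_rate_eur: float) -> float:
    """Total benefit, valuing saved hours at a rate agreed with finance."""
    return (entry.hours_saved * hourly_rate_eur
            + entry.revenue_gain_eur
            + entry.risk_reduction_eur)


def return_per_euro(entry: LedgerEntry, hourly_rate_eur: float) -> float:
    """Benefit returned for every euro invested (2.5 means EUR 2.50 per EUR 1)."""
    if entry.cost_eur <= 0:
        raise ValueError("cost must be positive")
    return benefit_eur(entry, hourly_rate_eur) / entry.cost_eur


if __name__ == "__main__":
    # Made-up pilot figures for illustration.
    pilot = LedgerEntry("campaign drafting", cost_eur=4_000,
                        hours_saved=100, revenue_gain_eur=5_000)
    print(f"{pilot.initiative}: EUR {return_per_euro(pilot, hourly_rate_eur=50):.2f} per EUR 1")
```

Fixing the hourly rate and the benefit definitions before the pilot starts keeps later ROI discussions about the results rather than about the method.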

To reduce risks and speed up adoption, integrate AI capabilities into existing systems using APIs and microservices. Successful pilots often deliver measurable results within 3–4 months and involve small, focused teams of 4 to 6 people.

With a roadmap and budget in place, the next step is aligning stakeholders across the organisation to ensure a unified vision.

Align Stakeholders Across the Organisation

Alignment across departments is critical to scaling AI initiatives. Companies with a Chief AI Officer see a 10% higher ROI on AI investments, and those using centralised or hub-and-spoke operating models achieve up to 36% more ROI compared to decentralised setups. Notably, 57% of CAIOs report directly to the CEO or Board, highlighting the strategic importance of this role.

To streamline collaboration, establish a cross-functional AI Council with executive sponsors from Legal, HR, Finance, and Operations. This council should have the authority to resolve issues, unblock projects, and fast-track approvals for initiatives with high potential. For instance, BBVA created a central AI network to evaluate ideas, prioritise high-value use cases, and facilitate smooth collaboration, enabling faster transitions from proof-of-concept to production.

The CAIO should act as a performance monitor, ensuring AI efforts remain aligned with business goals. Regular 30-minute C-suite updates - sharing "1 win, 1 warning, and 1 wildcard" - can maintain executive engagement without overwhelming leaders. These updates help build fluency among decision-makers and keep AI on the boardroom agenda.

Set company-wide adoption goals and incorporate them into departmental KPIs. Encourage senior executives to lead by example. For instance, Sarah Friar, CFO at OpenAI, frequently shares how she uses ChatGPT in her daily work and motivates her team to experiment, making OpenAI one of the most advanced adopters of AI.

To balance speed and oversight, implement a tiered risk governance model. Low-risk projects can proceed quickly with pre-approved guidelines, while high-risk initiatives undergo structured reviews. This approach avoids unnecessary bureaucracy while maintaining responsible oversight. It’s worth noting that while 63% of companies consider generative AI a high priority, 91% feel unprepared to implement it responsibly.

Learn from Industry Events

Industry events offer invaluable opportunities for CAIOs to refine their strategies. With nearly 75% of CEOs now identifying as their company's primary AI decision-maker, these gatherings provide direct access to global leaders, proven frameworks, and peer insights that can save months of independent effort.

The RAISE Summit, taking place on 3–4 June 2026 at the Carrousel du Louvre in Paris, is a prime example. This event will host over 9,000 attendees, 2,000+ companies, and 350+ speakers. It features sessions on AI strategy, industry-specific tracks (e.g., healthcare, cybersecurity, finance), and networking opportunities tailored for senior AI leaders. Attending such events allows CAIOs to explore emerging tools, validate their roadmaps against industry benchmarks, and build connections that support long-term success.

With global AI investment projected at €390 billion for 2025 and a further 19% increase expected in 2026, learning from those who have successfully navigated similar challenges is essential for maximising returns.

Conclusion: CAIO Success in the Next 12 Months

The road ahead for Chief AI Officers in 2026 is both clear and demanding. To succeed, they’ll need to focus on five interconnected priorities: robust governance, scalable agentic AI, strong data infrastructure, workforce transformation, and clear business alignment. These aren’t standalone objectives; together, they form a strategy where each piece strengthens the others. This integrated approach ensures that technical rollouts, compliance efforts, and organizational changes align seamlessly.

The pressure is on. CAIOs must deliver measurable ROI quickly to keep their organizations ahead of the curve. Companies that succeed will be those that escape "pilot purgatory" and fully scale their AI initiatives.

With 94% of companies moving beyond basic experimentation and 79% already adopting AI agents, the competition is intensifying. The focus now is on tying AI efforts directly to business outcomes. As PwC highlights:

The companies that grasp not just what AI can do but continually reassess strategy and evolve what that means to their business will lead in the age of AI.

In this fast-changing environment, staying informed is non-negotiable. Events like the RAISE Summit in Paris offer invaluable opportunities to refine strategies and build critical connections. With over 9,000 attendees and 350+ speakers, such gatherings can accelerate progress and provide insights that might otherwise take months to uncover.

FAQs

What are the main responsibilities of a Chief AI Officer in 2026?

In 2026, the role of a Chief AI Officer (CAIO) is pivotal in ensuring that AI initiatives are tightly aligned with an organisation's business goals and deliver tangible results. Their duties span a wide range of responsibilities, from integrating AI into everyday operations to fostering an environment where AI adoption thrives. They focus on identifying and prioritising impactful use cases that can generate the highest returns.

A CAIO also plays a key role in maintaining ethical standards and managing risks to ensure AI is used responsibly and complies with regulations. Beyond governance, they lead organisational transformation by enhancing AI literacy and equipping teams with the skills to leverage AI tools effectively. As AI continues to drive innovation and provide a competitive edge, CAIOs must stay ahead of emerging trends and scale AI solutions to support long-term growth.

How can businesses align their AI strategies with overall goals effectively?

To make sure AI strategies truly align with business objectives, companies need to connect their AI efforts directly to their larger goals. This means prioritizing high-impact use cases that can deliver measurable results, setting clear KPIs to track progress, and regularly monitoring ROI to ensure these initiatives contribute to long-term growth and efficiency.

Equally important is creating a shared vision for AI across the organisation. This requires strong leadership support, clear communication about how AI fits into the company’s strategic goals, and encouraging collaboration among departments. When AI is seamlessly integrated into the overall business strategy, it can fuel innovation, provide a competitive advantage, and transform AI investments into real, measurable outcomes.

How can organisations ensure effective AI governance and compliance?

To manage AI governance and compliance effectively, organisations need to establish specific policies and standards that ensure AI projects align with legal, ethical, and regulatory expectations. This involves setting clear guidelines for how AI is developed and used, performing thorough risk assessments, and setting up strong monitoring systems to track AI performance and adherence to compliance requirements.

Incorporating regulatory requirements into everyday operations is equally important. This can be achieved through practical measures like regular data audits, clear accountability structures, and routine compliance checks. Promoting AI literacy within teams and appointing dedicated personnel for AI oversight can also enhance compliance efforts and help organisations stay ahead of shifting regulatory landscapes.