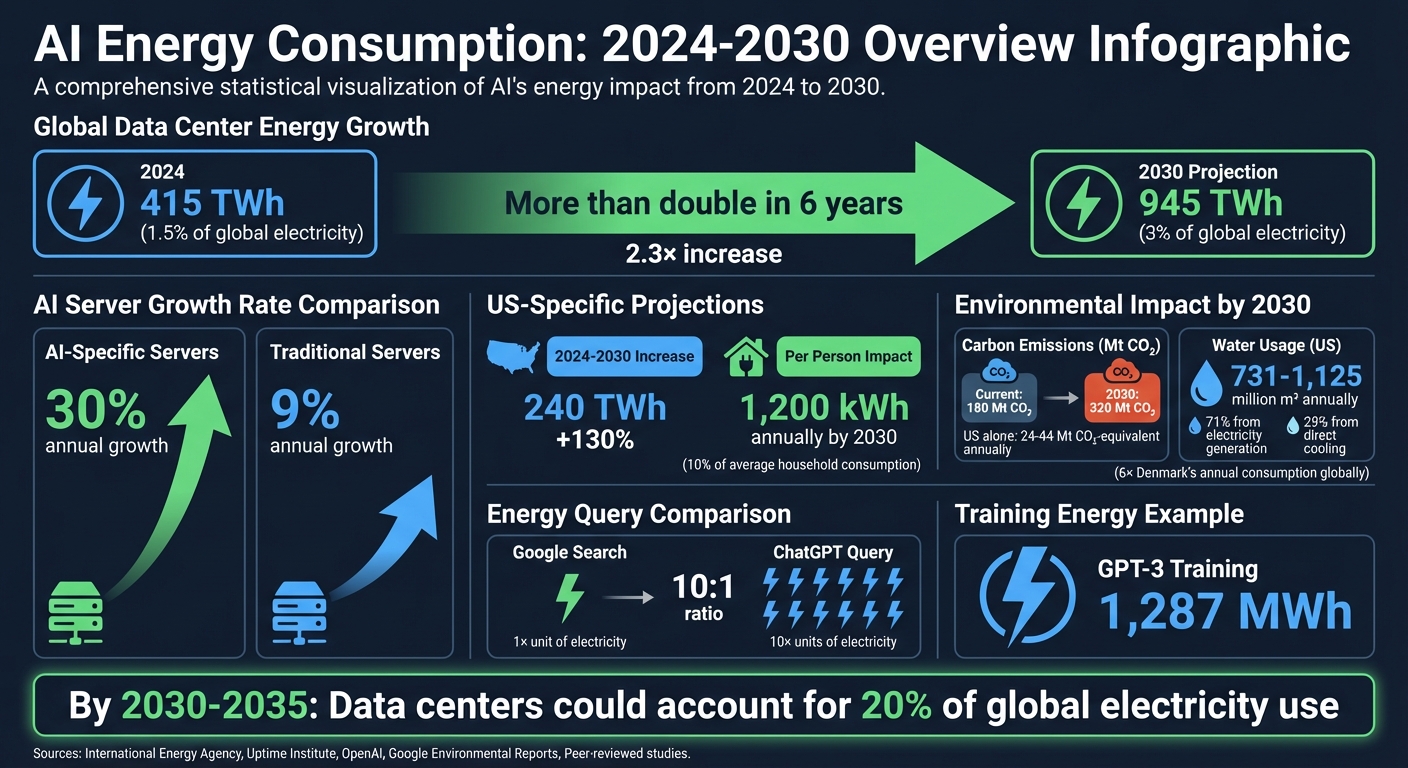

AI's rapid growth brings a massive energy challenge. By 2030, data centres could consume 945 TWh annually - more than double 2024 levels - accounting for 3% of global electricity use. Training and running AI models require immense power, with a single ChatGPT query using 10× the electricity of a Google search. Rising carbon emissions, water usage, and electronic waste further compound the issue.

Key insights:

- Energy Use: Electricity use by AI-specific (accelerated) servers is growing 30% annually, far outpacing the 9% growth of traditional servers.

- Environmental Costs: AI data centres may emit 320 Mt CO₂ by 2030 and use water equivalent to six times Denmark's annual consumption.

- Hardware Waste: Short GPU lifespans lead to significant electronic waste.

Solutions include advanced hardware like photonic chips, smaller AI models, and renewable energy adoption. Companies like Microsoft and Apple are already implementing energy-efficient practices. However, without major upgrades to energy infrastructure and transparent energy tracking, AI's future growth could face serious constraints.

Figure: AI Energy Consumption Statistics and Projections 2024-2030

Understanding AI's Energy Consumption Problem

Current and Projected Energy Use in AI Systems

In 2024, data centres worldwide consumed a staggering 415 TWh, accounting for 1.5% of global electricity usage. By 2030, this number is expected to climb to 945 TWh, doubling its share to 3% of global consumption. What's driving this surge? Accelerated servers built specifically for AI workloads are seeing a 30% annual growth in electricity use, far outpacing the 9% growth rate of traditional servers.
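As a rough check on the figures above, the sketch below derives the compound annual growth rate implied by going from 415 TWh to 945 TWh, and compares what 30% and 9% yearly growth add up to over the same period. The six-year span (2024 to 2030) and the function names are assumptions made for illustration, not part of the cited projections.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


def compound(value: float, rate: float, years: int) -> float:
    """Value after growing at `rate` per year for `years` years."""
    return value * (1 + rate) ** years


YEARS = 6  # assumption: 2024 -> 2030

# Global data centre consumption quoted in the article (TWh).
overall_rate = implied_annual_growth(415, 945, YEARS)
print(f"Implied overall growth: {overall_rate:.1%} per year")

# Cumulative growth multiples for each server class at the quoted annual rates.
print(f"Accelerated servers: x{compound(1.0, 0.30, YEARS):.2f}")
print(f"Traditional servers: x{compound(1.0, 0.09, YEARS):.2f}")
```

Under these assumptions the overall figure works out to roughly 15% per year, sitting between the two server classes, which is consistent with accelerated servers being a growing share of the total.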

Zooming in on the United States, the numbers are even more striking. By 2030, data centre electricity use in the US alone is projected to rise by 240 TWh - a 130% increase from 2024 levels. To put it in perspective, total US data centre consumption would then reach about 1,200 kWh per person annually, which is roughly 10% of the average American household's yearly electricity consumption. Together, the US and China are expected to dominate, accounting for nearly 80% of global data centre expansion by 2030.
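The US figures can be reproduced with a few lines of arithmetic. This is a back-of-the-envelope sketch: the population of 340 million is an assumption introduced here, and the 2024 baseline is derived from the quoted rise and percentage rather than taken from the source.

```python
TWH_TO_KWH = 1e9  # 1 TWh = 1,000,000,000 kWh

increase_twh = 240        # projected rise by 2030 (from the article)
increase_fraction = 1.30  # "130% increase" (from the article)
us_population = 340e6     # ASSUMPTION: approximate US population

# A 130% increase of 240 TWh implies this 2024 baseline.
baseline_twh = increase_twh / increase_fraction
total_2030_twh = baseline_twh + increase_twh

per_capita_kwh = total_2030_twh * TWH_TO_KWH / us_population

print(f"Implied 2024 baseline: {baseline_twh:.0f} TWh")
print(f"Implied 2030 total:    {total_2030_twh:.0f} TWh")
print(f"Per person in 2030:    {per_capita_kwh:.0f} kWh")
```

With that population assumption, the result lands close to the article's figure of about 1,200 kWh per person, and it is the 2030 total, not the 240 TWh increase alone, that produces it.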

Mahmut Kandemir, a Computer Science and Engineering professor at Penn State, sheds light on the scale of the issue:

"Training these models involves thousands of graphics processing units (GPUs) running continuously for months, leading to high electricity consumption. By 2030–2035, data centres could account for 20% of global electricity use."

This skyrocketing demand for energy sets the stage for a broader discussion on environmental impacts.

Carbon Emissions, Water Use, and Electronic Waste

Energy consumption is only one piece of the puzzle. Data centres are also significant contributors to carbon emissions, water use, and electronic waste.

Currently, data centres are responsible for around 180 Mt of indirect CO₂ emissions, about 0.5% of global combustion emissions. By 2030, these emissions are expected to rise to 320 Mt CO₂. In the US alone, AI server deployment could generate between 24 and 44 Mt of CO₂-equivalent emissions annually by 2030.

Water usage is another growing concern. AI data centres rely heavily on water for cooling, with the US projected to use between 731 and 1,125 million m³ annually by 2030. Interestingly, 71% of this water footprint comes indirectly from electricity generation, while the remaining 29% is tied to direct cooling needs.
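To make the 71%/29% split concrete, the following sketch applies it to both ends of the projected US range. The function name is hypothetical, and the split is assumed to hold equally at both ends of the range, which the source does not state.

```python
def split_water_footprint(total_million_m3: float, indirect_share: float = 0.71):
    """Split a water footprint into (indirect, direct) volumes in million m³."""
    indirect = total_million_m3 * indirect_share  # tied to electricity generation
    direct = total_million_m3 - indirect          # tied to on-site cooling
    return indirect, direct


# Low and high ends of the 2030 US projection quoted above (million m³ per year).
for total in (731, 1125):
    indirect, direct = split_water_footprint(total)
    print(f"{total} million m³ -> indirect {indirect:.0f}, direct {direct:.0f}")
```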

Then there’s the issue of electronic waste. The short lifespan of GPUs and other high-performance components means that outdated or damaged hardware is discarded at an alarming rate. Kandemir highlights the problem:

"The short lifespan of GPUs and other HPC components results in a growing problem of electronic waste, as obsolete or damaged hardware is frequently discarded."

Manufacturing these components requires rare earth minerals, a process that not only depletes natural resources but also contributes to environmental degradation. The constant cycle of upgrades and disposals adds to the strain on waste management systems and natural resources.

These environmental challenges underscore the urgency of addressing energy constraints.

How Energy Constraints Could Limit AI Development

Energy availability is quickly becoming a bottleneck for AI growth. The infrastructure needed to power data centres simply isn’t keeping up with demand. Data centres can be operational in just 2–3 years, but the energy infrastructure that supplies them often takes longer to plan and build. In Northern Virginia, a major hub for data centres, new facilities are facing grid connection delays of up to seven years. Meanwhile, Dublin has effectively halted new data centre connections due to grid capacity limits.

These delays create major roadblocks for AI development. Concentrating data centres in specific regions leads to localized surges in energy demand, overwhelming transmission and distribution systems. Some operators are attempting to sidestep these delays by co-locating gas-fired power plants with data centres. While this might address immediate power needs, it raises serious concerns about sustainability.

Without significant upgrades to energy infrastructure, these constraints won’t just slow AI’s progress - they’ll also jeopardize global sustainability goals for 2030.


Why AI Systems Consume So Much Energy

Energy Requirements for Training and Running AI Models

AI systems demand immense energy, mainly due to the computational intensity of both training and running models. Training large-scale AI models involves operating thousands of GPUs non-stop for weeks or even months. These GPUs perform trillions of calculations to adjust billions of parameters. For instance, training GPT-3 required a staggering 1,287 MWh of energy.

After training, the energy demand doesn’t drop off. Once deployed, these models handle billions of user interactions through inference. At Google, about 60% of machine learning energy is consumed by inference activities, while training accounts for the remaining 40%. To put this into perspective, in May 2025, Google reported that processing a single Gemini Apps text prompt consumed 0.24 Wh of energy - comparable to watching nine seconds of television. Breaking it down further, each prompt used 0.14 Wh for active accelerators, 0.06 Wh for CPU and memory tasks, and 0.04 Wh for idle capacity and overhead. The idle energy consumption is necessary to ensure systems can manage sudden spikes in traffic.
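The per-prompt breakdown above can be checked and scaled up with a short sketch. The component figures come from the paragraph; the daily prompt volume is a purely hypothetical number chosen for illustration, not a reported statistic.

```python
# Per-prompt energy components in Wh, as quoted in the article.
PROMPT_ENERGY_WH = {
    "active accelerators": 0.14,
    "CPU and memory": 0.06,
    "idle capacity and overhead": 0.04,
}

total_wh = sum(PROMPT_ENERGY_WH.values())

for component, wh in PROMPT_ENERGY_WH.items():
    print(f"{component}: {wh:.2f} Wh ({wh / total_wh:.0%})")
print(f"total: {total_wh:.2f} Wh")


def daily_energy_mwh(prompts_per_day: float) -> float:
    """Energy for a day's prompts in MWh (1 MWh = 1,000,000 Wh)."""
    return prompts_per_day * total_wh / 1e6


# HYPOTHETICAL volume: one billion prompts per day.
print(f"{daily_energy_mwh(1e9):.0f} MWh per day")
```

The point of the scaling step is that a figure which looks negligible per prompt becomes material once multiplied by very large request volumes.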

This relentless energy usage creates a significant hurdle for achieving 2030 sustainability goals. It also underscores inefficiencies that arise during deployment, pushing the need for more efficient infrastructure.

Infrastructure Inefficiencies and Hardware Limitations

The hardware and infrastructure supporting AI systems are major contributors to energy inefficiency. In modern data centres, servers alone account for roughly 60% of electricity consumption, while cooling systems represent another substantial portion. Even in highly efficient hyperscale data centres, cooling can consume about 7% of total energy, but in less optimized enterprise facilities, this figure can exceed 30%.

Jeff Wittich, Chief Product Officer at Ampere Computing, highlights the challenges posed by high-performance AI hardware:

"Data centers must employ immersion cooling... this cooling method consumes additional power, compelling data centers to allocate 10% to 20% of their energy solely for this task."

Traditional hardware design further exacerbates inefficiency. For example, in single-GPU workloads, the surrounding infrastructure - such as multi-socket CPUs, memory channels, and redundant power supplies - often ends up consuming more energy than the GPU itself when other accelerators are idle. A server equipped with 8×A100 GPUs (like the DGX server) can draw between 4.0 and 4.5 kW of power, with much of this energy going towards non-computational components.

Adding to the problem is the short three-year lifespan of high-performance computing components, which leads to significant electronic waste. The embodied carbon from these components can account for 50% to over 80% of their total lifecycle emissions. These inefficiencies not only increase energy consumption but also pose a serious challenge to global sustainability efforts.
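One way to see why lifespan matters is to amortise embodied carbon over the years a component stays in service. The sketch below does that with hypothetical numbers (3,000 kg embodied, 500 kg per year operational) chosen only so that the embodied share falls inside the 50% to 80% range quoted above; they are not measurements of any real device.

```python
def annual_footprint_kg(embodied_kg: float, operational_kg_per_year: float,
                        lifespan_years: float) -> float:
    """Yearly footprint when embodied carbon is spread over the service life."""
    return embodied_kg / lifespan_years + operational_kg_per_year


def embodied_share(embodied_kg: float, operational_kg_per_year: float,
                   lifespan_years: float) -> float:
    """Fraction of lifecycle emissions that is embodied (manufacturing)."""
    lifecycle = embodied_kg + operational_kg_per_year * lifespan_years
    return embodied_kg / lifecycle


# HYPOTHETICAL component: values are illustrative only.
EMBODIED_KG = 3000
OPERATIONAL_KG_PER_YEAR = 500

for years in (3, 6):
    share = embodied_share(EMBODIED_KG, OPERATIONAL_KG_PER_YEAR, years)
    yearly = annual_footprint_kg(EMBODIED_KG, OPERATIONAL_KG_PER_YEAR, years)
    print(f"{years}-year life: embodied share {share:.0%}, {yearly:.0f} kg CO2e per year")
```

In this toy case, doubling the service life from three to six years cuts the annualised footprint by a third, even though operational emissions per year are unchanged.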


New Technologies Making AI More Energy-Efficient

Tackling AI's energy challenges requires advancements in both hardware and algorithm design. Here's how cutting-edge developments are making AI systems more efficient.

Advanced Hardware: Neuromorphic Chips and Optical Processors

AI hardware is undergoing rapid transformation. One standout innovation is photonic integrated circuits (PICs), which use light instead of electricity for calculations. Since over 90% of deep neural network operations involve matrix-vector multiplications, photonic chips offer a massive advantage: they produce minimal heat and consume far less energy. These chips have an Energy Per unit Area (EPA) that’s 4.1× lower than 28 nm CMOS chips and 14.77× lower than the latest 3 nm CMOS chips.

Another breakthrough is neuromorphic hardware, inspired by the human brain. Using Spiking Neural Networks (SNNs), these systems process information only when "spikes" occur, significantly reducing idle power consumption. Researchers have shown that photonic computing systems could outperform electronic processors in both speed and energy efficiency. For example, IBM Research has introduced co-packaged optics technology, which integrates optical connections directly into devices. This eliminates the need for energy-draining signal conversions between electrical and optical formats, improving bandwidth while cutting power usage for training large language models.

Manufacturing also plays a role in energy efficiency. Photonic chips require just two metal layers for routing, compared to 20 layers in 3 nm CMOS chips. This streamlined process reduces embodied carbon - the emissions from manufacturing - which accounts for 50–82% of modern cloud servers' carbon footprint. A great example is the Lightening-Transformer (LT) electro-photonic accelerator. Over a 10-year period, it’s projected to emit two orders of magnitude less carbon than NVIDIA’s A100 when running BERT-large inferences.

But hardware is only part of the equation. Efficient model design is equally critical.

Building Smaller, More Efficient AI Models

The AI industry is shifting its focus from "bigger is better" to "small is sufficient." This approach emphasizes selecting the smallest model that meets performance needs for a specific task. Why? Because as models grow larger, the performance gains diminish, while energy consumption rises steeply.
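The "small is sufficient" rule can be expressed as a simple selection step: among the models that clear a task's accuracy bar, pick the one that costs the least energy per query. The sketch below is a minimal illustration; the candidate names, accuracies, and energy figures are all hypothetical.

```python
from typing import NamedTuple, Optional, Sequence


class Candidate(NamedTuple):
    name: str
    params_billion: float
    accuracy: float      # task accuracy on a validation set, 0..1
    wh_per_query: float  # measured energy per query


def smallest_sufficient(candidates: Sequence[Candidate],
                        min_accuracy: float) -> Optional[Candidate]:
    """Return the lowest-energy model meeting the accuracy bar, or None."""
    good_enough = [c for c in candidates if c.accuracy >= min_accuracy]
    if not good_enough:
        return None
    return min(good_enough, key=lambda c: c.wh_per_query)


# HYPOTHETICAL candidates: names and numbers are made up for illustration.
CANDIDATES = [
    Candidate("small-7b", 7, 0.86, 0.05),
    Candidate("medium-70b", 70, 0.90, 0.40),
    Candidate("large-400b", 400, 0.92, 2.00),
]

choice = smallest_sufficient(CANDIDATES, min_accuracy=0.85)
print(choice.name if choice else "no model meets the bar")
```

The design choice worth noting is that the accuracy threshold is set per task first, and model size is only considered afterwards, rather than defaulting to the largest model available.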

A striking example: In 2023, Meta's Llama-13B outperformed the much larger GPT-3 (175 billion parameters) on several benchmarks, while consuming 24 times less energy. If this model selection strategy were applied globally, AI energy consumption could drop by 27.8% in 2025, saving approximately 31.9 TWh - the equivalent of five nuclear reactors' annual output.
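The savings figures above imply a baseline that the text does not state directly. As a quick sanity check, this sketch backs it out from the two quoted numbers; the derived values are implications of the article's figures, not independently sourced data.

```python
saved_twh = 31.9        # quoted saving
saved_fraction = 0.278  # quoted 27.8% reduction
reactor_count = 5       # quoted equivalence

# If 31.9 TWh is 27.8% of the total, the implied 2025 baseline is:
implied_baseline_twh = saved_twh / saved_fraction

# Output per reactor implied by the "five reactors" comparison.
implied_twh_per_reactor = saved_twh / reactor_count

print(f"Implied AI energy baseline: {implied_baseline_twh:.1f} TWh")
print(f"Implied output per reactor: {implied_twh_per_reactor:.2f} TWh per year")
```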

Another avenue for efficiency is domain-specific models. Instead of deploying massive, general-purpose models for every task, organizations are creating specialized models for fields like healthcare or chemistry. These tailored models significantly reduce computational demands. Combining this with hardware-software co-design - using techniques like data pruning, low-precision formats, and algorithmic upgrades such as FlashAttention - further amplifies energy savings.
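Of the techniques listed, low-precision formats are the easiest to show in a few lines. The following is a minimal sketch of symmetric 8-bit quantisation in plain Python: weights are mapped to integers in [-127, 127] with a single scale factor, so each value needs one byte instead of the four used by a 32-bit float. It illustrates the general idea only and is not how any particular framework implements it; the function names are assumptions.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0, 0.8]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is bounded by half a quantisation step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q)
print(f"max error {max_error:.5f} (step size {scale:.5f})")
```

The trade-off is a small, bounded rounding error in exchange for a fourfold reduction in memory per weight, which in turn reduces the data movement that dominates much of inference energy.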

These advancements are paving the way for large-scale adoption of energy-efficient AI.

Organisations Implementing Energy-Efficient AI

Some of the world's leading tech companies are proving that energy-efficient AI is not just possible, but scalable.

Microsoft, for instance, introduced "Splitwise" technology in September 2025. This system splits large language model inference into two phases across different machines, achieving 2.35× higher throughput under the same power budget and cutting costs by 20% compared to traditional designs. The company also secured 19 gigawatts of renewable energy through global power purchase agreements in 2024.

Apple has been ahead of the curve, running its data centres on 100% renewable energy since 2014. Its energy mix includes biogas fuel cells, hydropower, solar, and wind.

Amazon made waves in October 2025 with "Project Rainier", a €10.4 billion AI data centre campus in Indiana. Spanning 486,000 square metres, it uses custom "Trainium 2" chips from Annapurna Labs to run Anthropic's models more efficiently. Similarly, Meta’s open-source Llama-3-70B model allows thousands of organisations to avoid training similar models from scratch, spreading the environmental cost across the AI community.

These examples show how combining hardware innovation, smarter model design, and renewable energy can help achieve the AI industry's 2030 sustainability goals.

Practical Approaches to Reduce AI's Energy Footprint

Reducing AI's energy demands requires more than just better hardware and efficient models. Companies need actionable plans to meet climate goals by 2030. Below are some practical strategies to align AI operations with these objectives.

Public-Private Partnerships for Energy-Efficient AI

Collaboration between public and private sectors is a key driver for energy-efficient AI. For example, in May 2025, a partnership involving Emerald AI, Oracle Cloud, NVIDIA, Salt River Project, and the Electric Power Research Institute managed to cut power usage by 25% on a 256-GPU cluster during peak grid times using Emerald Conductor software.
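The general idea behind this kind of grid-responsive operation can be sketched as a power cap applied only during peak hours. The code below is a toy illustration with a hypothetical hourly load profile; it does not represent how Emerald Conductor works internally, which the source does not describe.

```python
def apply_peak_cap(hourly_kw, peak_hours, reduction=0.25):
    """Reduce cluster power by `reduction` during the given peak hours only."""
    return [
        kw * (1 - reduction) if hour in peak_hours else kw
        for hour, kw in enumerate(hourly_kw)
    ]


# HYPOTHETICAL flat 100 kW cluster load over six hours, with hours 2-4 as grid peak.
baseline = [100] * 6
capped = apply_peak_cap(baseline, peak_hours={2, 3, 4})

print(capped)
print(f"Energy avoided during peak: {sum(baseline) - sum(capped):.0f} kWh")
```

In practice the deferred work still has to run later, so a cap like this shifts demand away from stressed periods rather than eliminating it.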

Tech companies are also co-investing in Small Modular Reactor projects to accelerate the availability of low-emission electricity. Over 20 GW of such projects are being developed in the United States, with a target to deliver by 2030.

Government agencies like the U.S. National Science Foundation, the Department of Energy, and DARPA are funding research into neuromorphic chips and carbon footprint analyses. As the International Energy Agency (IEA) points out:

"Delivering the energy for AI, and seizing the benefits of AI for energy, will require even deeper dialogue and collaboration between the tech sector and the energy industry."

Using AI to Optimize Energy Systems

AI is proving to be a powerful tool for improving energy efficiency. Machine learning can enhance grid performance, potentially unlocking 175 GW of additional transmission capacity without building new infrastructure - a critical advantage when new power lines in advanced economies can take four to eight years to construct.

Microsoft's "Project Forge", launched in September 2025, is a prime example. This initiative uses AI to schedule energy-intensive tasks like training and inference during periods when renewable energy is abundant. Alongside carbon-aware scheduling and GreenOps strategies, Microsoft has contracted 19 GW of renewable energy in pursuit of its goal of 100% carbon-free electricity by 2030.

Companies can further reduce their environmental impact by adopting carbon-aware scheduling, which shifts energy-heavy processes to times or locations where renewable energy is more readily available. Combined with precise energy tracking, these strategies help organisations make meaningful progress toward sustainability.
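Carbon-aware scheduling in time can be sketched as a sliding-window search: given a forecast of grid carbon intensity, start the job in the window with the lowest total. The forecast values below are hypothetical, and a real scheduler would also have to respect deadlines, capacity, and forecast uncertainty, none of which this minimal version handles.

```python
def best_start_hour(intensity_forecast, duration_hours):
    """Return the start index whose window has the lowest total carbon intensity."""
    if duration_hours <= 0 or duration_hours > len(intensity_forecast):
        raise ValueError("duration must fit inside the forecast horizon")
    last_start = len(intensity_forecast) - duration_hours
    return min(
        range(last_start + 1),
        key=lambda start: sum(intensity_forecast[start:start + duration_hours]),
    )


# HYPOTHETICAL hourly forecast in gCO2e/kWh (lower around midday solar output).
forecast = [450, 420, 300, 180, 150, 210, 380, 460]

start = best_start_hour(forecast, duration_hours=3)
window = forecast[start:start + 3]
print(f"Start at hour {start}, average intensity {sum(window) / 3:.0f} gCO2e/kWh")
```

The same search works across locations instead of hours by replacing the time series with one forecast per region and picking the region with the lowest value.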

Measuring and Reporting Energy Use in AI

Accurate energy measurement is essential for meaningful reductions. Many organisations currently focus only on active GPU power, ignoring the energy consumed by host systems, idle resources, and data centre overhead. This approach can underestimate total energy use by over 50%.
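The gap between GPU-only and full-stack accounting is easy to illustrate. In the sketch below, host and idle energy are added to accelerator energy, and the sum is multiplied by a facility overhead factor (PUE, power usage effectiveness, a standard data centre metric that the article does not name explicitly). All input values are hypothetical.

```python
def total_energy_wh(accelerator_wh: float, host_wh: float,
                    idle_wh: float, pue: float) -> float:
    """Full-stack energy: IT equipment energy scaled by facility overhead (PUE)."""
    it_energy = accelerator_wh + host_wh + idle_wh
    return it_energy * pue


def undercount_fraction(accelerator_wh: float, total_wh: float) -> float:
    """Share of real energy missed when only active accelerators are counted."""
    return 1 - accelerator_wh / total_wh


# HYPOTHETICAL workload: 100 Wh on accelerators, 60 Wh host, 30 Wh idle, PUE 1.2.
total = total_energy_wh(accelerator_wh=100, host_wh=60, idle_wh=30, pue=1.2)
missed = undercount_fraction(100, total)

print(f"Full-stack energy: {total:.0f} Wh")
print(f"GPU-only accounting misses {missed:.0%} of it")
```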

In May 2025, Google demonstrated the importance of comprehensive measurement. By accounting for all energy components, including cooling systems, they found that a single median text prompt used 0.24 Wh of energy and 0.26 mL of water. Google also reported that efficiency gains over the preceding 12 months had cut per-prompt energy use by 33× and carbon emissions by 44×.

To ensure consistent reporting, organisations should adopt established frameworks like the Software Carbon Intensity Specification and the Azure Well-Architected Framework. Harmail Chatha, Senior Director of Cloud Operations at Nutanix, highlights the importance of granular tracking:

"Once you can measure the VM, then you got to get to the workload level. And that's when you're going to be able to make smart, intelligent decisions on what a workload consumption looks like."

Leadership accountability is equally important. Companies need to move beyond vague promises by conducting regular energy audits, providing transparent reports, and tying sustainability goals to executive performance metrics. Standardised measurement practices are critical to ensuring AI's advancements align with environmental objectives.
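For reference, the Software Carbon Intensity specification mentioned above defines its score as (E × I + M) per R, where E is energy consumed, I is the carbon intensity of that energy, M is embodied emissions, and R is a functional unit such as a request or user. The sketch below computes it for a hypothetical service; only the 0.24 Wh per prompt figure comes from the article, and the grid intensity and embodied emissions are illustrative assumptions.

```python
def sci_score(energy_kwh: float, intensity_g_per_kwh: float,
              embodied_g: float, functional_units: float) -> float:
    """SCI = (E * I + M) / R, in gCO2e per functional unit."""
    if functional_units <= 0:
        raise ValueError("functional_units must be positive")
    operational_g = energy_kwh * intensity_g_per_kwh
    return (operational_g + embodied_g) / functional_units


prompts = 1_000_000
energy_kwh = prompts * 0.24 / 1000  # 0.24 Wh per prompt (from the article)

score = sci_score(
    energy_kwh=energy_kwh,
    intensity_g_per_kwh=400,  # ASSUMPTION: illustrative grid intensity
    embodied_g=24_000,        # ASSUMPTION: embodied emissions allocated to these prompts
    functional_units=prompts,
)
print(f"{score:.2f} gCO2e per prompt")
```

Because the score is a rate per functional unit rather than a total, it can be tracked at the workload level, which is the granularity the quote above argues for.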

Conclusion: Meeting the 2030 Sustainability Target for AI

Reaching the goal of sustainable AI by 2030 demands a united effort across hardware advancements, energy efficiency, and clear accountability. Companies need to focus on hardware–software co-design to cut energy use and carbon emissions by over 800×. Shifting from traditional GPUs to technologies like neuromorphic chips and optical processors, while prioritising smaller, domain-specific models over massive general-purpose ones, will play a key role.

But hardware efficiency alone isn’t enough. The energy powering AI systems must also evolve. By 2030, renewable energy sources are expected to supply nearly 50% of the growth in data centres' electricity demand. Still, this won’t fully address the challenge. The IEA emphasises the importance of energy availability:

"Affordable, reliable and sustainable electricity supply will be a crucial determinant of AI development, and countries that can deliver the energy needed at speed and scale will be best placed to benefit."

Another critical aspect is reducing embodied carbon. This involves creating modular, repairable hardware to extend the lifespan of equipment and decrease electronic waste. As Meta researcher Carole-Jean Wu puts it:

"We cannot reduce what cannot be measured. We need high fidelity tools to measure the lifecycle emissions of a computer system – both operational and embodied carbon footprint."

Finally, accountability is the glue that holds these efforts together. Transparent measurement tools and regular audits are essential to track both operational and embodied emissions. The technology to make this happen is already available - what’s missing is the collective determination to scale these solutions effectively before 2030.

FAQs

How can AI companies reduce energy use while achieving sustainability by 2030?

AI companies can cut down on energy use and work towards environmental goals by focusing on energy-efficient technologies and smarter strategies. For instance, optimising data centre operations, adopting advanced silicon chips, and streamlining AI processes can make a big difference in reducing power consumption. Additionally, AI-powered energy management systems can enhance efficiency across operations and infrastructure.

Another key move is transitioning to renewable energy sources. By powering AI systems with cleaner energy, companies can align with ecological targets. Tools like sustainability metrics can help track and measure environmental impact, ensuring progress in balancing innovation with responsibility.

By merging smart tech, renewable energy, and clear tracking methods, AI companies can continue to grow responsibly while tackling climate challenges head-on by 2030.

What are the main technologies making AI systems more energy-efficient?

Energy efficiency in AI is seeing major advancements through hardware optimization, data center efficiency, and algorithmic improvements. Here's how these areas are shaping a more sustainable future:

1. Smarter Hardware

Microprocessors and GPUs are now designed to deliver more performance for each watt of energy they consume. This means they can handle complex AI tasks while using less power. Improvements in transistor density and the use of innovative cooling systems also make these components more efficient.

2. Greener Data Centres

Data centres, the backbone of AI operations, are becoming more eco-friendly. Many are now powered by renewable energy sources, like solar or wind. On top of that, advanced cooling systems are helping to cut down on the energy needed to keep these facilities operational.

3. Efficient Algorithms

AI algorithms are being fine-tuned to need less computational power. Techniques like model compression, pruning, and efficient training methods are reducing the energy demands of AI tasks. This not only makes AI systems faster but also aligns their development with environmental goals.

These combined efforts are setting the stage for AI systems that can grow rapidly while being more mindful of their environmental footprint. The goal? To achieve a balance between innovation and sustainability by 2030.

How does the energy consumption of AI models affect their environmental impact?

AI models come with a hefty energy bill. Training, deploying, and running these systems require substantial power, which has a direct effect on the environment. For example, training a single large AI model can produce hundreds of tonnes of CO₂ emissions - comparable to the carbon footprint of multiple long-haul flights. And it doesn’t stop there. By 2030, the energy consumption of data centres supporting AI systems is projected to more than double, potentially hitting 945 terawatt-hours annually. To put that in perspective, that's slightly more than the entire yearly electricity consumption of Japan.

To tackle these pressing concerns, the focus is shifting toward energy-efficient strategies. These include refining hardware designs, boosting the efficiency of data centres, and adopting sustainable AI benchmarks. By integrating renewable energy sources and exploring innovative approaches, the industry has the opportunity to cut down the environmental costs of AI while continuing to push boundaries in technological progress.